Templates for Data Structures and Algorithms

Contents

| Symbol | Designation |

|---|---|

| ✓ | This is a problem from LeetCode's interview crash course. |

| ✠ | This is a problem stemming from work done through Interviewing IO. |

| ★ | A right-aligned ★ (one or more) indicates my own personal designation as to the problem's relevance, importance, priority in review, etc. |

- Backtracking

- Binary search

- Dynamic programming

- Graphs

- Greedy algorithms

- Heaps

- Linked lists

- Matrices

- Sliding window

- Stacks and queues

- Trees

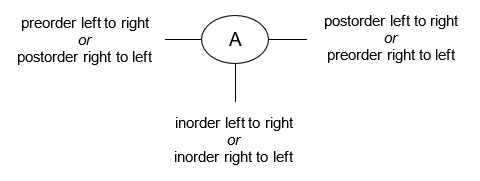

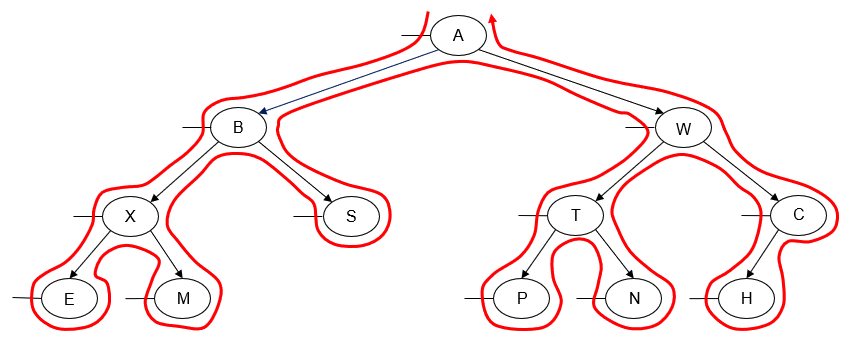

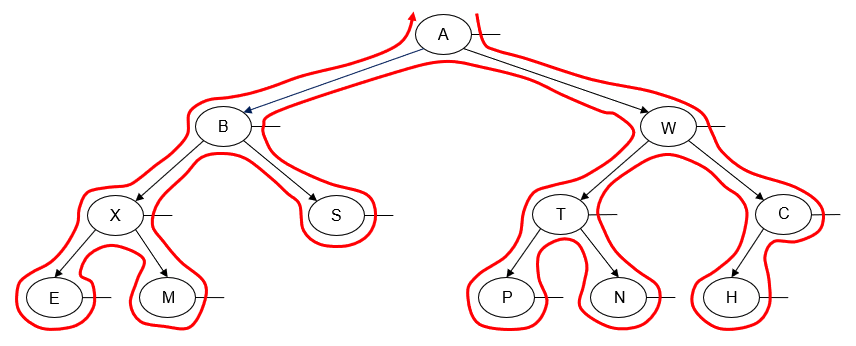

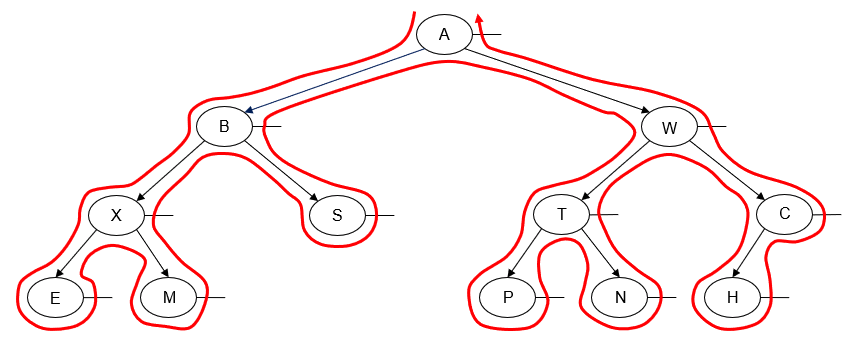

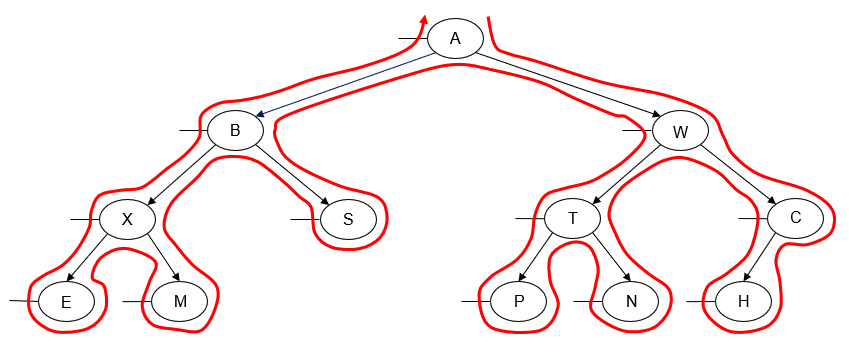

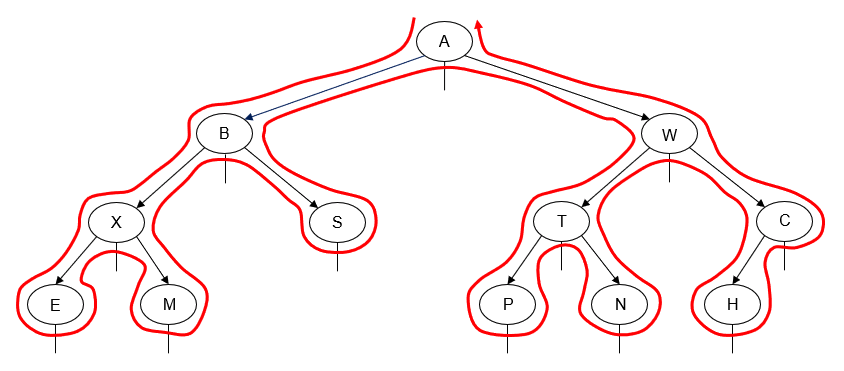

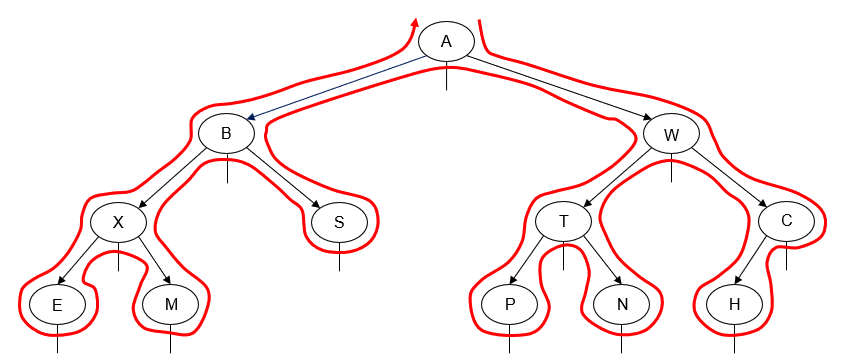

- Manually determine order of nodes visited ("tick trick")

- Pre-order traversal

- Post-order traversal

- In-order traversal

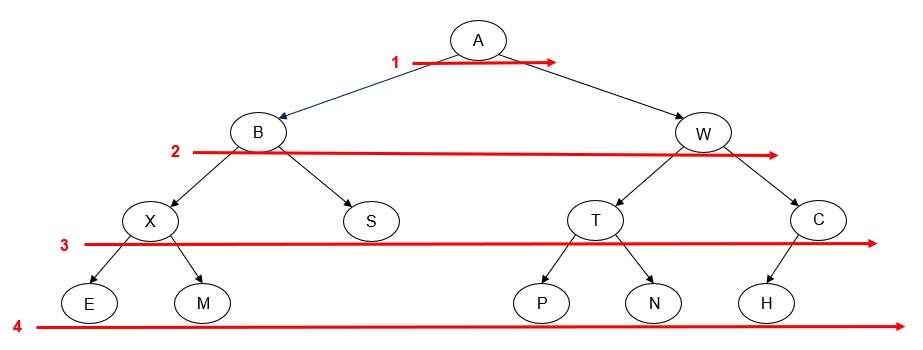

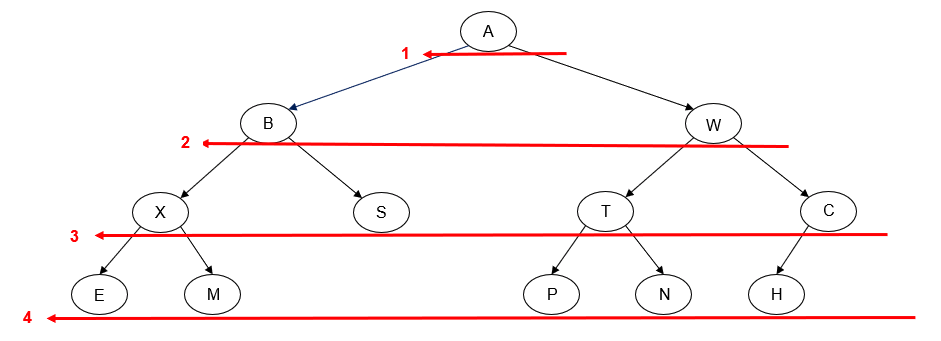

- Level-order traversal

- Level-order (BFS)

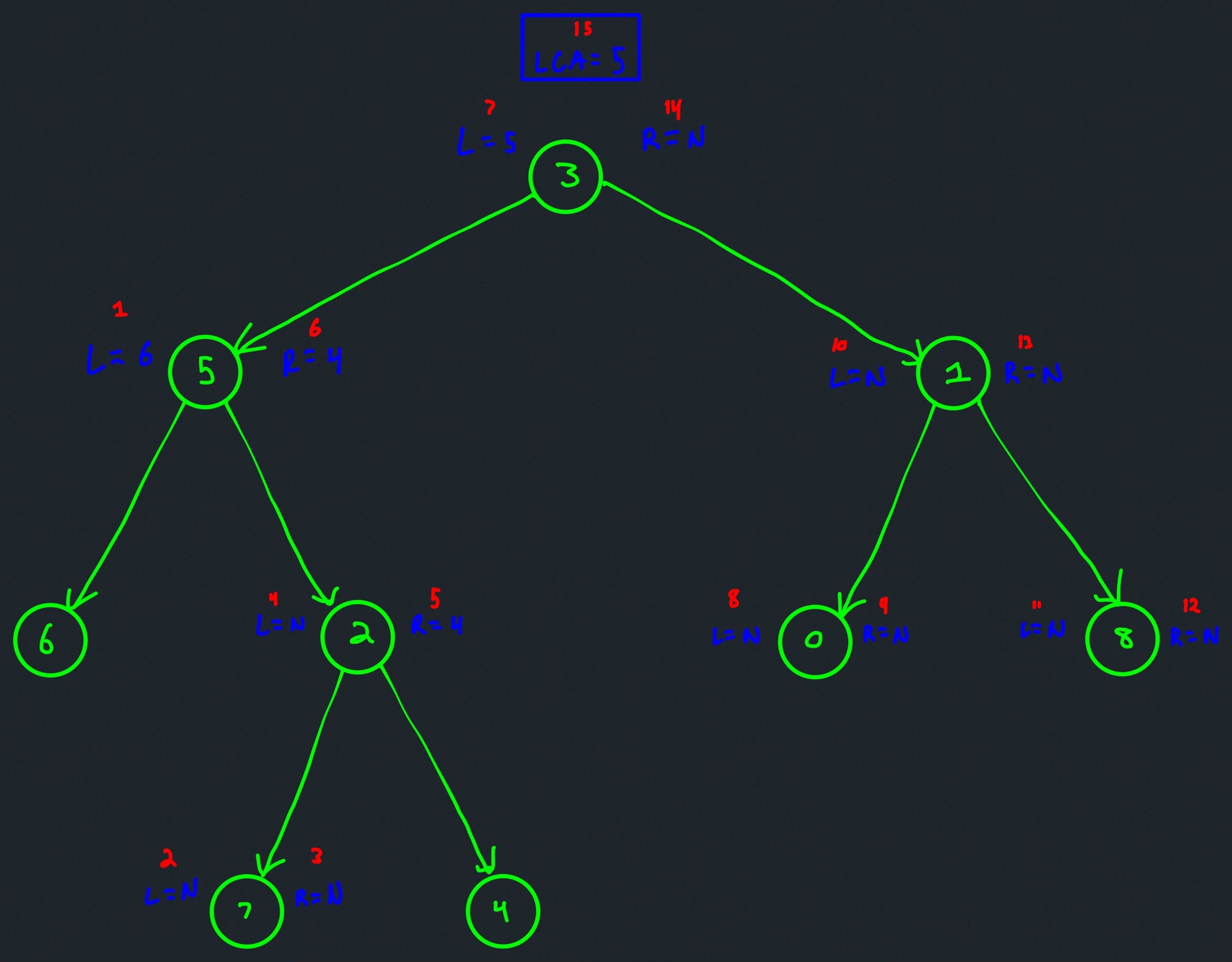

- Induction (solve subtrees recursively, aggregate results at root)

- Traverse-and-accumulate (visit nodes and accumulate information in nonlocal variables)

- Combining templates: induction and traverse-and-accumulate

- Two pointers

- Miscellaneous

Backtracking

Remarks

TBD

def fn(curr, OTHER_ARGUMENTS...):

if (BASE_CASE):

# modify the answer

return

ans = 0

for (ITERATE_OVER_INPUT):

# modify the current state

ans += fn(curr, OTHER_ARGUMENTS...)

# undo the modification of the current state

return ans

Examples

LC 46. Permutations (✓) ★★

Given an array nums of distinct integers, return all the possible permutations. You can return the answer in any order.

class Solution:

def permute(self, nums: List[int]) -> List[List[int]]:

def backtrack(curr):

if len(curr) == len(nums):

permutations.append(curr[:]) # note that we append a _copy_ of curr

return

for num in nums:

if num not in curr:

curr.append(num)

backtrack(curr)

curr.pop()

permutations = []

backtrack([])

return permutations

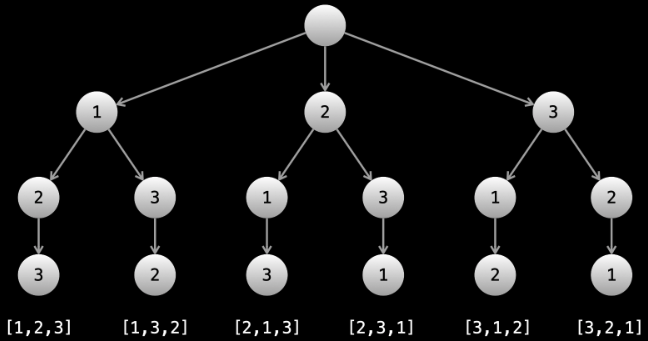

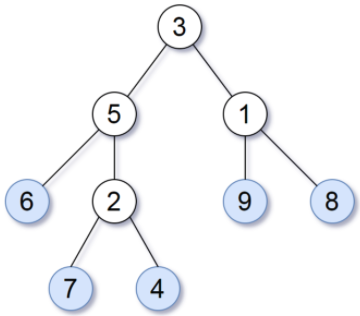

What actually is a permutation of nums? It's essentially all possible orderings of the elements of nums such that no element is duplicated. A backtracking strategy to generate all permutations sounds promising — what would the base case be? It would be when the current permutation being generated, say curr, has the same length as the input array nums: curr.length == nums.length (of course, this assumes we've done our due diligence and have prevented duplicates from being added to curr). The base case of curr.length == nums.length means we have completed the process of generating a permutation and we cannot go any further; specifically, if we look at the process of generating permutations as a tree, then completing the generation of a permutation means we have reached a leaf node, as illustrated in the following image for the input nums = [1, 2, 3]:

Building all permutations for this problem, where the input is an array of numbers, means we need all elements to make an appearance at the first index, all other elements to make an appearance at the second index, and so on. Hence, we should loop over all elements of nums for each call to our backtrack function, where we should always check to see if a number is already in curr before adding it to curr. Each call to backtrack is like visiting a node in the tree of candidates being generated. The leaves are the base cases/answers to the problem.

For the solution given above, if we simply add print(curr) after the line curr.append(num), then we can very clearly see how each call to backtrack is like visiting a node in the tree (it's like performing a DFS on an imaginary tree):

[1]

[1, 2]

[1, 2, 3] # complete permutation (leaf node)

[1, 3]

[1, 3, 2] # complete permutation (leaf node)

[2]

[2, 1]

[2, 1, 3] # complete permutation (leaf node)

[2, 3]

[2, 3, 1] # complete permutation (leaf node)

[3]

[3, 1]

[3, 1, 2] # complete permutation (leaf node)

[3, 2]

[3, 2, 1] # complete permutation (leaf node)

The entire list of complete permutations is then returned as the answer:

[[1, 2, 3], [1, 3, 2], [2, 1, 3], [2, 3, 1], [3, 1, 2], [3, 2, 1]]

The time complexity above effectively amounts to roughly , because we iterate through all of nums for each call to backtrack, and membership to curr is a linear cost when curr is an array, and then we're guaranteed to make calls to backtrack, and each call to backtrack then results in calls, and so forth. If we wanted to make a micro-optimization, then we could introduce a hash set to make the membership checks on curr instead of , but this change pales in comparison to the factorial cost of calling backtrack so many times:

class Solution:

def permute(self, nums: List[int]) -> List[List[int]]:

def backtrack(curr):

if len(curr) == len(nums):

permutations.append(curr[:])

return

for num in nums:

if num not in lookup:

curr.append(num)

lookup.add(num)

backtrack(curr)

curr.pop()

lookup.remove(num)

lookup = set()

permutations = []

backtrack([])

return permutations

LC 78. Subsets (✓) ★★

Given an integer array nums of unique elements, return all possible subsets (the power set). The solution set must not contain duplicate subsets. Return the solution in any order.

class Solution:

def subsets(self, nums: List[int]) -> List[List[int]]:

def backtrack(curr, num_idx):

subs.append(curr[:])

if num_idx == len(nums): # note that this base case is implied

return # since `backtrack` is only called in the

# for loop when `num_idx < len(nums)`

for i in range(num_idx, len(nums)):

curr.append(nums[i])

backtrack(curr, i + 1)

curr.pop()

subs = []

backtrack([], 0)

return subs

This problem is quite similar to LC 46. Permutations but with some very notable differences, namely container length and element order:

- Length: A subset can have any length from

0throughn(wherenis the size of the input array of distinct integers), inclusive, but a permutation has a fixed length ofn. - Order: The containers

[1, 2, 3]and[3, 2, 1]are considered to be different permutations but the same subset.

If we have a problem where containers like those above are considered to be duplicates and we do not want to consider duplicates (e.g., such as this problem concerned with finding subsets), then a common "trick" is to add a rule where each call of the backtrack function allows us to only consider elements that come after the previously processed element:

This is a very common method of avoiding duplicates in backtracking problems — have an integer argument that represents a starting point for iteration at each function call.

For example, in this problem, we start with the root being the empty container, []:

[]

With nums = [1, 2, 3], we can clearly consider each element as the beginning of its own subset:

[ ]

/ | \

[1] [2] [3]

Now what? Remember that calling backtrack is like moving to another node; hence, to respect the strategy remarked on above, when we move to another node, that node should only involve elements that come after the one we have just processed. This means the tree of possibilities above should end up looking like the following:

[ ] # level subsets: []

/ | \

[1] [2] [3] # level subsets: [1], [2], [3]

/ \ |

[2] [3] [3] # level subsets: [1,2], [1,3], [2,3]

|

[3] # level subsets: [1,2,3]

The actual order of subset generation from our solution code is not hard to anticipate in light of the strategy we've been discussing, where, again, we're basically doing a DFS on an imaginary tree, and once we hit the last indexed element (i.e., when index == len(nums)) we move back up the tree from child to parent:

[

[],

[1],

[1,2],

[1,2,3],

[1,3],

[2]

[2,3]

[3]

]

The order conjectured above is confirmed by the return value of our solution when nums = [1, 2, 3]:

[[], [1], [1, 2], [1, 2, 3], [1, 3], [2], [2, 3], [3]]

The process becomes even clearer if we add the print statement print(subs) after subs.append(curr[:]) as well as the print statement print('COMPLETED') after if num_idx == len(nums):. Making these modifications and running the solution code again on the input nums = [1,2,3] results in the following being printed to standard output:

[[]]

[[], [1]]

[[], [1], [1, 2]]

[[], [1], [1, 2], [1, 2, 3]]

COMPLETED

[[], [1], [1, 2], [1, 2, 3], [1, 3]]

COMPLETED

[[], [1], [1, 2], [1, 2, 3], [1, 3], [2]]

[[], [1], [1, 2], [1, 2, 3], [1, 3], [2], [2, 3]]

COMPLETED

[[], [1], [1, 2], [1, 2, 3], [1, 3], [2], [2, 3], [3]]

COMPLETED

[[], [1], [1, 2], [1, 2, 3], [1, 3], [2], [2, 3], [3]]

Note that the text 'COMPLETED' is only ever printed after an element/subset has been added to subs that ends with 3, which corresponds to the leaves of the tree shown earlier.

To summarize, when generating permutations, we had a length requirement, where we needed to use all of the elements in the input; hence, we only considered leaf nodes as part of the actual returned answer. With subsets, however, there is no length requirement; thus, every node should be in the returned answer, including the root node, which is why the very first line of the backtrack function is to add a copy of curr to the returned answer subs.

LC 77. Combinations (✓) ★★

Given two integers n and k, return all possible combinations of k numbers out of 1 ... n. You may return the answer in any order.

class Solution:

def combine(self, n: int, k: int) -> List[List[int]]:

def backtrack(curr, start_val):

if len(curr) == k:

combinations.append(curr[:])

return

for i in range(start_val, n + 1):

curr.append(i)

backtrack(curr, i + 1)

curr.pop()

combinations = []

backtrack([], 1)

return combinations

It can be very helpful to sketch out a tree of possibilities if we suspect backtracking may be an effective strategy for coming up with a solution (just like sketching out things for general tree problems, linked list problems, etc.).

The root of our tree would be [], the empty list. Unlike in LC 78. Subsets, there is an explicit length requirement in terms of the elements that can be added to the container we must ultimately return; hence, not all nodes in the tree of possibilities will represent entities that should be added to our answer array.

What is clear is that each entry added to the list we must return must have a length of k, and it must contain numbers from the list [1, ..., n], inclusive, where no number in the k-length list is duplicated. This means we should consider combining some of the strategy points we used in both LC 46. Permutations and LC 78. Subsets, namely using a length requirement as part of the base case as well as preventing duplicates from being considered, respectively.

The thinking goes something like the following: start with the empty list, [], as the root of the tree:

[]

Each k-length list can have a number from [1, ..., n], inclusive; thus, the root should have n children, namely nodes with values from 1 through n (the example below uses the values of n = 4, k = 2 from the first example on LeetCode):

[ ]

[1] [2] [3] [4]

Now what? A complete solution will be generated only when the path has length k, but each path right now has a length of 1 (but k = 2 for this example). To keep generating paths (i.e., possible solutions), we need to avoid considering duplicates, which means for each node value i, we only subsequently consider node values [i + 1, ..., n], which results in our overall tree looking like the following:

[ ]

[1] [2] [3] [4]

[2] [3] [4] [3] [4] [4]

Since the input specifies k = 2, the tree above suffices to report the following as the combinations list:

[

[1,2],

[1,3],

[1,4],

[2,3],

[2,4],

[3,4]

]

LC 797. All Paths From Source to Target (✓) ★

Given a directed acyclic graph (DAG) of n nodes labeled from 0 to n - 1, find all possible paths from node 0 to node n - 1, and return them in any order.

The graph is given as follows: graph[i] is a list of all nodes you can visit from node i (i.e., there is a directed edge from node i to node graph[i][j]).

class Solution:

def allPathsSourceTarget(self, graph: List[List[int]]) -> List[List[int]]:

def backtrack(curr_path, node):

if node == len(graph) - 1:

paths.append(curr_path[:])

return

for neighbor in graph[node]:

curr_path.append(neighbor)

backtrack(curr_path, neighbor)

curr_path.pop()

paths = []

backtrack([0], 0)

return paths

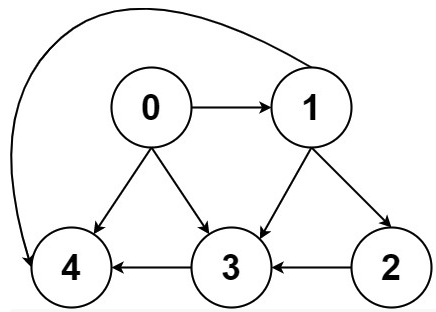

This is a great backtracking problem because backtracking is not necessarily the obvious first strategy of attack. Even if we do think of backtracking, how exactly should we proceed? This becomes much clearer (again!) once we start to sketch out the tree of possibilities. What would the root of our tree be? It has to be node 0 since our goal is to find all paths from node 0 to node n - 1. This also suggests something about our base case: we should terminate path generation whenever node n - 1 is reached. We don't have to worry about cycles or anything like that since we're told the graph is a DAG. Additionally, the graph is already provided as an adjacency list which makes traversing each node's neighbors quite easy.

So what's the strategy? Let's start with our root node (which needs to be part of every solution):

0

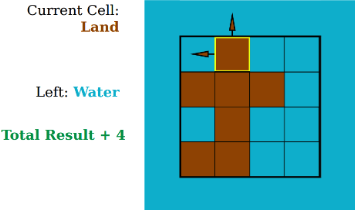

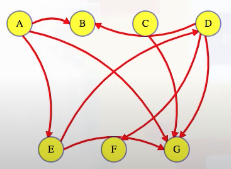

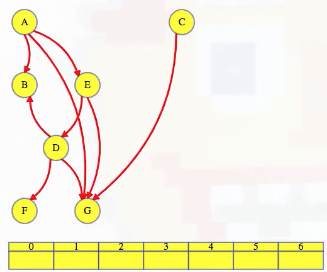

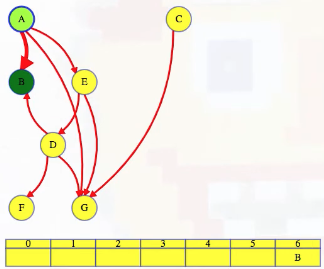

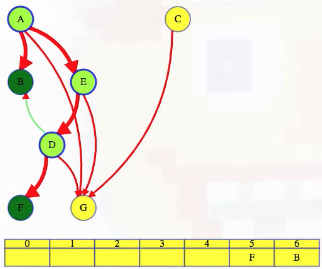

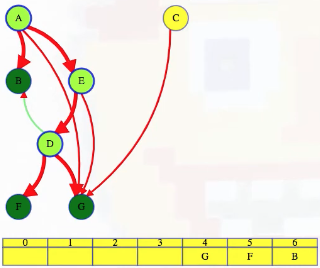

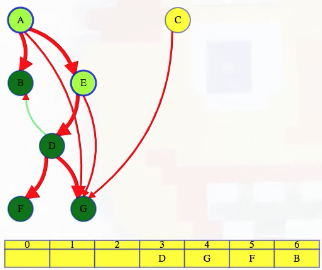

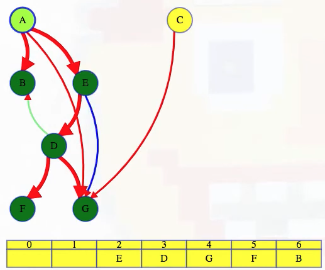

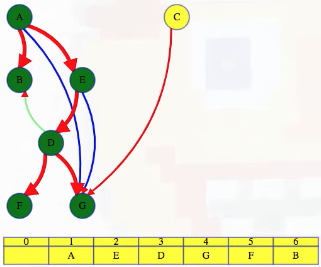

Now what? Each neighbor of node 0 needs to be considered (we're trying to get to node n - 1 from node 0 in whatever way is possible, which means exploring all possible paths). Let's use the input of the second example on LeetCode for a concrete illustration: graph = [[4,3,1],[3,2,4],[3],[4],[]]. This graphs looks like the following:

As stated above, from 0 we need to consider each of its neighbors:

0

/ | \

1 3 4

The tree above makes it clear [0,4] will be one possible path, but what are the other possible paths? It looks like leaf nodes will be the complete solutions that need to be added to our answer that we ultimately return. For each node that is not 4 (i.e., n - 1 == 4 in this case), we need to consider each possible neighbor (this will not be endless because we're told the graph is a DAG). Considering the neighbors for each node means our tree of possibilities ultimately ends up looking as follows:

0

/ | \

1 3 4

/ | \ |

2 3 4 4

| |

3 4

|

4

Hence, the set of possible paths is as expected:

[[0,4],[0,3,4],[0,1,3,4],[0,1,2,3,4],[0,1,4]]

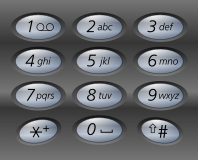

LC 17. Letter Combinations of a Phone Number (✓) ★★

Given a string containing digits from 2-9 inclusive, return all possible letter combinations that the number could represent. Return the answer in any order.

A mapping of digit to letters (just like on the telephone buttons) is given below. Note that 1 does not map to any letters.

class Solution:

def letterCombinations(self, digits: str) -> List[str]:

if not digits:

return []

keypad = {

'2': 'abc',

'3': 'def',

'4': 'ghi',

'5': 'jkl',

'6': 'mno',

'7': 'pqrs',

'8': 'tuv',

'9': 'wxyz'

}

def backtrack(curr, start_idx):

if len(curr) == len(digits):

combinations.append("".join(curr))

return

for i in range(start_idx, len(digits)):

digit = digits[i]

for letter in keypad[digit]:

curr.append(letter)

backtrack(curr, i + 1)

curr.pop()

combinations = []

backtrack([], 0)

return combinations

As with most other backtracking problems, it helps if we start by sketching out the tree of possibilities, where we can imagine the root being an empty string:

''

Now let's consider the input digits = "23", the input for the first example on LeetCode. The desired output is ["ad","ae","af","bd","be","bf","cd","ce","cf"]. How can this be achieved? The first digit is 2, which means 'a', 'b', and 'c' are valid starting letters for combinations:

' '

/ | \

'a' 'b' 'c'

We do not want to add duplicates of these letters for subsequent possible combinations but instead consider the potential letters arising from the next digit (i.e., we want to prevent processing duplicates by only ever processing digits after the current digit). The next digit in this case is 3 which corresponds to possible letters 'd', 'e', and 'f'. Our tree now looks like the following:

' '

/ | \

'a' 'b' 'c'

/ | \ / | \ / | \

'd' 'e' 'f' 'd' 'e' 'f' 'd' 'e' 'f'

The tree of possibilities above makes it clear the letter combinations we should return are as follows, as expected:

[

'ad',

'ae',

'af',

'bd',

'be',

'bf',

'cd',

'ce',

'cf',

]

LC 39. Combination Sum (✓) ★

Given an array of distinct integers candidates and a target integer target, return a list of all unique combinations of candidates where the chosen numbers sum to target. You may return the combinations in any order.

The same number may be chosen from candidates an unlimited number of times. Two combinations are unique if the frequency of at least one of the chosen numbers is different.

It is guaranteed that the number of unique combinations that sum up to target is less than 150 combinations for the given input.

class Solution:

def combinationSum(self, candidates: List[int], target: int) -> List[List[int]]:

def backtrack(path, path_sum, next_path_node_idx):

if path_sum == target:

paths.append(path[:])

return

for i in range(next_path_node_idx, len(candidates)):

candidate = candidates[i]

if path_sum + candidate <= target:

path.append(candidate)

backtrack(path, path_sum + candidate, i)

path.pop()

paths = []

backtrack([], 0, 0)

return paths

This problem is similar to LC 77. Combinations, but now we are taksed with generating all combinations of candidates such that each candidate number is allowed however many times we want, but the sum of the numbers for each combination/mixture of candidates has to equal the given target.

Start by drawing a tree!

[]

Each number in candidates may be part of a path sum we want to return, where the sum of the elements in the path equates to target. Let's use the input provided in the first example on LeetCode: candidates = [2,3,6,7], target = 7:

[ ]

/ / \ \

2 3 6 7

The problem description indicates any number may be reused however many times we desire, but we still don't want to generate duplicates such as [2,2,3], [2,3,2], etc. How can we avoid doing this? We can use the same strategy that many other backtracking problems use (i.e., ensure we only begin processing elements that are either the current element itself or elements that come after the current element):

[ ]

/ / \ \

2 3 6 7

/ / \ \ /|\ / \ |

2 3 6 7 3 6 7 6 7 7

..................................

When do we stop the process of generating paths? Since all possible values in candidates are positive, this necessarily means a path is no longer valid if its path sum exceeds the given target value.

Note that the following lines in the solution above are important:

# ...

for i in range(next_path_node_idx, len(candidates)):

candidate = candidates[i]

if path_sum + candidate <= target:

path.append(candidate)

# ...

It might be tempting to modify path_sum with the candidate value before entering the if block, but this would be a mistake. Why? Because the list of candidates is not necessarily ordered; hence, if we made the update path_sum += candidate and the new path_sum exceeded target, then we would no longer consider that path, but this would also prevent us from exploring other branches using candidates of a possibly lesser value where path_sum + candidate did not exceed target.

LC 79. Word Search (✓) ★★★

Given an m x n board and a word, find if the word exists in the grid.

The word can be constructed from letters of sequentially adjacent cells, where "adjacent" cells are horizontally or vertically neighboring. The same letter cell may not be used more than once.

class Solution:

def exist(self, board: List[List[str]], word: str) -> bool:

def valid(row, col):

return 0 <= row < m and 0 <= col < n

def backtrack(row, col, word_idx, seen):

if word_idx == len(word):

return True

for dr, dc in dirs:

next_row, next_col = row + dr, col + dc

if valid(next_row, next_col) and (next_row, next_col) not in seen:

next_char = board[next_row][next_col]

if next_char == word[word_idx]:

seen.add((next_row, next_col))

if backtrack(next_row, next_col, word_idx + 1, seen):

return True

seen.remove((next_row, next_col))

return False

m = len(board)

n = len(board[0])

dirs = [(1,0),(-1,0),(0,1),(0,-1)]

for row in range(m):

for col in range(n):

if board[row][col] == word[0] and backtrack(row, col, 1, {(row, col)}):

return True

return False

This problem has a very DFS feel to it, but what makes this a backtracking problem and not a strict DFS problem is because we may visit a square multiple times on the same initial function call. For example, suppose we're looking for the word CAMERA in the following grid:

CAM

ARE

MPS

We may start exploring as follows (each | represents the path taken):

C A M

|

A R E

|

M P S

The M-letter above does not have E as a neighbor so we remove the M from consideration and move back up to A, which also does not have an M-neighbor except the one we just visited, which we know leads to nothing. So we remove A as well and we're back at C, and we note C does have another A-neighbor it can visit. And we end up seeing the following as a valid path:

C -> A -> M

|

A <- R <- E

M P S

This path visits the A under C again in order to come up with a valid path. Thus, while we may not be allowed to use a square more than once for the answer, there are possibly multiple ways to use a square to form different candidates, as illustrated above.

We should still use a set seen like we would normally do with DFS solutions so as to avoid using the same letter in the same path. Unlike in DFS solutions, however, we will remove from the set seen when backtracking and it's been determined that a path solution is no longer possible — we should only ever traverse an edge in the graph if we know that the resultant path could lead to an answer. Thus, we can also pass an index variable word_idx that indicates we are currently looking for word[word_idx], and then only move to (next_row, next_col) if word[word_idx] is the correct letter.

Since the answer could start from any square whose letter matches word[0], we need to systematically start our backtracking approach from any square with word[0] as its letter. If we exhaust all such squares and never find word, then we should return false. Of course, if we do find word in the midst of one of our backtracking approaches, then we return true immediately.

LC 52. N-Queens II (✓) ★★★

The n-queens puzzle is the problem of placing n queens on an n x n chessboard such that no two queens attack each other.

Given an integer n, return the number of distinct solutions to the n-queens puzzle.

class Solution:

def totalNQueens(self, n: int) -> int:

def backtrack(row, cols, diagonals, anti_diagonals):

# base case: n queens have been placed

if row == n:

return 1

solution = 0

for col in range(n):

diagonal = row - col

anti_diagonal = row + col

# queen is not placeable, move to considering next column

if (col in cols or diagonal in diagonals or anti_diagonal in anti_diagonals):

continue

# add the queen to the board

cols.add(col)

diagonals.add(diagonal)

anti_diagonals.add(anti_diagonal)

# move to next row with the updated board state

solution += backtrack(row + 1, cols, diagonals, anti_diagonals)

# remove the queen from the board since we have

# explored all valid paths using the above backtrack function call

cols.remove(col)

diagonals.remove(diagonal)

anti_diagonals.remove(anti_diagonal)

return solution

return backtrack(0, set(), set(), set())

This problem is difficult. And it's often used to explain backtracking at various learning levels (e.g., university and college classes). The solution to this problem is informative, especially in regards to how we might want to go about modeling backtracking solutions to other problems.

Whenver we make a call to backtrack, we're doing so by describing the state of whatever it is that is important for consideration in solving the problem at hand. For some problems, maybe that state involves a string and the number of used left and right parentheses (e.g., LC 22. Generate Parentheses), maybe the state involves the row and column of a grid under consideration along with the cells already seen and the index of a word that represents a character for which we are searching (e.g., LC 79. Word Search). And maybe, as in this problem, the state needs to involve a chessboard!

What state should we consider tracking? We need to somehow keep track of rows and columns in some way. The trickier part about this problem involves the diagonals. Even though we have a board in this problem, this is not like a graph problem where we need to conduct a DFS or BFS — fundamentally, our job at each step is to place a queen on the board so that it is not under attack. This is the backtracking part where we narrow down the range of possibilities we consider — the brute force approach would involve generating all board possibilities with queens and then narrow down which solutions actually work, but that would involve placing queens on the board in positions that are being attacked, which means the rest of the generation of possibilities is useless. We want to place queens in positions where they are not being attacked and where whatever subsequent positions we consider can actually lead to a solution. Only once we find a solution do we start to undo queen placement and try other queen placements, but the key is that every placement must be one that could potentially lead to success.

With everything in mind above, how should we proceed? First, it's helpful to just think about how queens move on a chessboard: unlimited moves vertically, horizontally, diagonally (top left to bottom right), and anti-diagonally (top right to bottom left). If we are given n queens on an n x n chessboard, then it's clear that, at the end of the board configuration, each row must have a queen and each column must have a queen. The trickiest part is managing the diagonals effectively.

But we can start by working with the insight above about rows and columns. Each time backtrack is called should result in the placement of a queen on a new row; that is, we'll pass row as an argument to backtrack, and when we have finally reached row == n (i.e., rows 0 through n - 1 have been filled with queens), then we will know we have found a valid solution. Finding a workable position on any given row means processing all column positions on that row until we have found a position that is not under attack.

What about columns? As noted above, for each row being processed, we will iterate through all row positions (i.e., across all columns) until we find a column position that is not under attack. The row we are processing is definitely not under attack due to how we're processing rows, but how do we know whether or not each column is under attack? If another queen is on any column we're considering for a current row, then that column position is invalid because that other queen's line of attack runs through the current position we're considering. We need to consider the next column position. To keep track of which columns are already occupied by queens (i.e., which columns would be considered to be under attack when we're trying to add a new queen), whenever we add a queen to the board, we should add the column position of the queen to a set cols that we can use when we're trying to add subsequent queens.

Cool! We consider rows sequentially so it's guaranteed each row we're considering is not under attack. We have a set cols that shows which columns are occupied. It seems like we need a similar "strategy" for diagonals and anti-diagonals that we currently have for columns; that is, whenever we add a queen to the board, we should also note which diagonal and anti-diagonal just became occupied. But how do we effectively apply a singular label or marker to a diagonal or anti-diagonal that spans multiple positions on the board?

This seems really tricky to do at first until we make note of a brilliant observation concerning diagonal and anti-diagonal movement (R and C below represent row and column position, respectively):

-

diagonal movement (top left to bottom right): If we start at any cell

(R, C)and move one position up, then we will be at cell(R - 1, C - 1). If we move one position down, then we will be at(R + 1, C + 1). In general, if we letdranddcrepresent the change in row or column value, respectively, then we will find ourselves always moving from cell(R, C)to(R + dr, C + dc)on a given diagonal. Importantly, note thatdr == dcsince a change in any direction effects each value in the same way (e.g., moving 4 spaces up meansdr == dc == -4since the row and column values both decrease by 4).But how does any of this help in service of creating a unique label for a diagonal? The insight lies in how the row and column values relate to each other. Let

(R, C)be the coordinates of a cell on a diagonal, and let's label the cell as having valueR - C. Then what happens whenever we move from cell(R, C)to cell(R + dr, C + dc)? Sincedr == dc, we have(R + dr) - (C + dc) = R + dr - C - dr = R - C; that is, the label for any cell on a diagonal is the same:

Hence, we should label visited diagonals by adding

row - colvalues to adiagonalsset. -

anti-diagonal movement (top right to bottom left): We can make a similar argument to the one above about effectively labeling anti-diagonals. Let

(R, C)represent any given cell on an anti-diagonal. Then moving up a cell would take us to cell(R - 1, C + 1)while moving down a cell would take us to cell(R + 1, C - 1); that is, the row and column values of a cell change at the same rate in an inversely proportional manner. We essentially havedr == dcagain, but there needs to be a sign difference in how we represent a movement from(R, C)to another cell:(R + dr, C - dc). Then(R + dr) + (C - dc) = R + dr + C - dr = R + C:

Hence, we should label visited anti-diagonals by adding

row + colvalues to ananti-diagonalsset.

LC 22. Generate Parentheses (✓) ★★★

Given n pairs of parentheses, write a function to generate all combinations of well-formed parentheses.

class Solution:

def generateParenthesis(self, n: int) -> List[str]:

def backtrack(curr_str, left_count, right_count):

if len(curr_str) == 2 * n:

valid_parens.append("".join(curr_str))

return

if left_count < n:

curr_str.append('(')

backtrack(curr_str, left_count + 1, right_count)

curr_str.pop()

if left_count > right_count:

curr_str.append(')')

backtrack(curr_str, left_count, right_count + 1)

curr_str.pop()

valid_parens = []

backtrack([], 0, 0)

return valid_parens

The editorial for this solution on LeetCode is quite good. The key insights (with the first being somewhat minor but the second one being critical):

- We should append the string we're building whenever its length is

2nbecause the string we're building, when taking a backtracking approach anyway, must have the potential to be valid and a complete solution at every point. Hence, once/if the candidate string reaches a length of2n, then we know the string satisfies the constraints of the problem and is a complete solution. - As a starting point, we can add as many left-parentheses as we want without fear of producing an invalid string (so long as the number of left parentheses we add doesn't exceed

n). We then need to start adding right parentheses. But how can we add right parentheses in general? We should only ever consider adding a right parenthesis when the total number of left parentheses exceeds the number of right parentheses. That way when we add a right parenthesis there's a chance the subsequent string could be a well-formed parenthetical string of length2n, as desired.

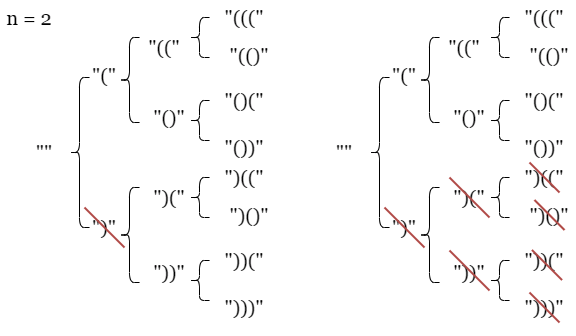

The LeetCode editorial linked above shows how sketching out a tree of possibilities is very useful for this problem, where the following illustration is for the beginning of the case where n = 2:

We can start to see how the logic of the solution above makes sense. For the sake of completeness and concreteness, consider the input of the first example on LeetCode, n = 3, and the corresponding desired output:

["((()))","(()())","(())()","()(())","()()()"]

We can make our own diagram that shows how each solution is built (the x indicates that potential solution path is longer pursued since further work on that path cannot possibly lead to a correct answer):

_____________________________________________________"

/ \

_________________________________________________(________________________________________________ x

/ \

_______________________((_______________________ _______________________()

/ \ / \

(((___ _________________(()________________ _________________()(________________ x

/ \ / \ / \

x ((()____ (()(____ _________(()) ()((____ _________()()

/ \ / \ / \ / \ / \

x ((())__ x (()()__ (())(__ x x ()(()__ ()()(__ x

/ \ / \ / \ / \ / \

x ((())) x (()()) x (())() x ()(()) x ()()()

As a note of reference, the tree above was generated using the binarytree package:

from binarytree import build2

my_tree = build2([

'"',

"(", 'x',

"((", "()", None, None,

"(((", "(()", "()(", "x",

"x", "((()", "(()(", "(())", "()((", "()()", None, None,

None, None, "x", "((())", "x", "(()()", "(())(", "x", "x", "()(()", "()()(", "x",

None, None, "x", "((()))", None, None, "x", "(()())", "x", "(())()", None, None, None, None, "x", "()(())", "x", "()()()"

])

root = my_tree.levelorder[0]

print(root)

LC 967. Numbers With Same Consecutive Differences (✓) ★★

Return all non-negative integers of length n such that the absolute difference between every two consecutive digits is k.

Note that every number in the answer must not have leading zeros. For example, 01 has one leading zero and is invalid.

You may return the answer in any order.

class Solution:

def numsSameConsecDiff(self, n: int, k: int) -> List[int]:

def digits_to_int(digits):

ans = digits[0]

for i in range(1, len(digits)):

ans = (ans * 10) + digits[i]

return ans

def backtrack(digit_arr):

if len(digit_arr) == n:

res.append(digits_to_int(digit_arr))

return

for num in range(10):

if abs(digit_arr[-1] - num) == k:

digit_arr.append(num)

backtrack(digit_arr)

digit_arr.pop()

res = []

for start_digit in range(1, 10):

backtrack([start_digit])

return res

The impossibility of leading zeros can complicate things if we're not careful. We still need to consider the digit 0 as part of potential constraint-satisfying integers. An easy way to handle this is to completely prevent 0 from being a possible leading digit at the outset. Execute the backtrack function for digit arrays that begin with each number 1 through 9, inclusive, and append complete solutions to an overall results array, res, that we ultimately return. It's easiest to manage the digits if we build each integer using a digits array and then return the actual integer once the digits array represents a constraint-satisfying integer.

LC 216. Combination Sum III (✓) ★

Find all valid combinations of k numbers that sum up to n such that the following conditions are true:

- Only numbers

1through9are used. - Each number is used at most once.

Return a list of all possible valid combinations. The list must not contain the same combination twice, and the combinations may be returned in any order.

class Solution:

def combinationSum3(self, k: int, n: int) -> List[List[int]]:

def backtrack(nums_arr, curr_sum, next_num):

if len(nums_arr) == k and curr_sum == n:

res.append(nums_arr[:])

return

for num in range(next_num, 10):

if curr_sum + num <= n:

nums_arr.append(num)

backtrack(nums_arr, curr_sum + num, num + 1)

nums_arr.pop()

res = []

backtrack([], 0, 1)

return res

The valid combinations can only involve positive integers, which means the sum we're building can only get bigger. Hence, we should only consider as possibilities combinations of integers for which the sum does not exceed n. Also, the numbers used as part of the combination need to be unique. Which means whenever we start with a certain number we should only ever consider subsequent integer values.

Binary search

Array

Remarks

TBD

def binary_search(arr, target):

left = 0 # starting index of search range (inclusive)

right = len(arr) - 1 # ending index of search range (inclusive)

result = -1 # result of -1 indicates target has not been found yet

while left <= right: # continue search while range is valid

mid = left + (right - left) // 2 # prevent potential overflow

if arr[mid] < target: # target is in right half

left = mid + 1 # (move `left` to narrow search range to right half)

elif arr[mid] > target: # target is in left half

right = mid - 1 # (move `right` to narrow search range to left half)

else: # target found (i.e., arr[mid] == target)

result = mid # store index where target is found (early return, if desired)

right = mid - 1 # uncomment to find first occurrence by narrowing search range to left half

# left = mid + 1 # uncomment to find last occurrence by narrowing search range to right half

if result != -1:

return result # target was found; return its index

else:

return left # target was not found; return insertion point to maintain sorted order

# NOTES:

# only one of the following lines should be uncommented in the else clause: `right = mid - 1` and `left = mid + 1` should

# `right = mid - 1` uncommented and `left = mid + 1` commented out results in searching for first occurrence of target

# `left = mid + 1` uncommented and `right = mid - 1` commented out results in searching for last occurrence of target

Examples

LC 704. Binary Search

Given an array of integers nums which is sorted in ascending order, and an integer target, write a function to search target in nums. If target exists, then return its index. Otherwise, return -1.

class Solution:

def search(self, nums: List[int], target: int) -> int:

left = 0

right = len(nums) - 1

while left <= right:

mid = left + (right - left) // 2

if target < nums[mid]: # target in left half, move right boundary

right = mid - 1

elif target > nums[mid]: # target in right half, move left boundary

left = mid + 1

else:

return mid # target at current mid position, return

return -1

The code above is the template for a basic binary search. We're guaranteed that the numbers in nums are unique, which means target, if it exists, will be found and the first index at which it occurs (and only index) will be returned.

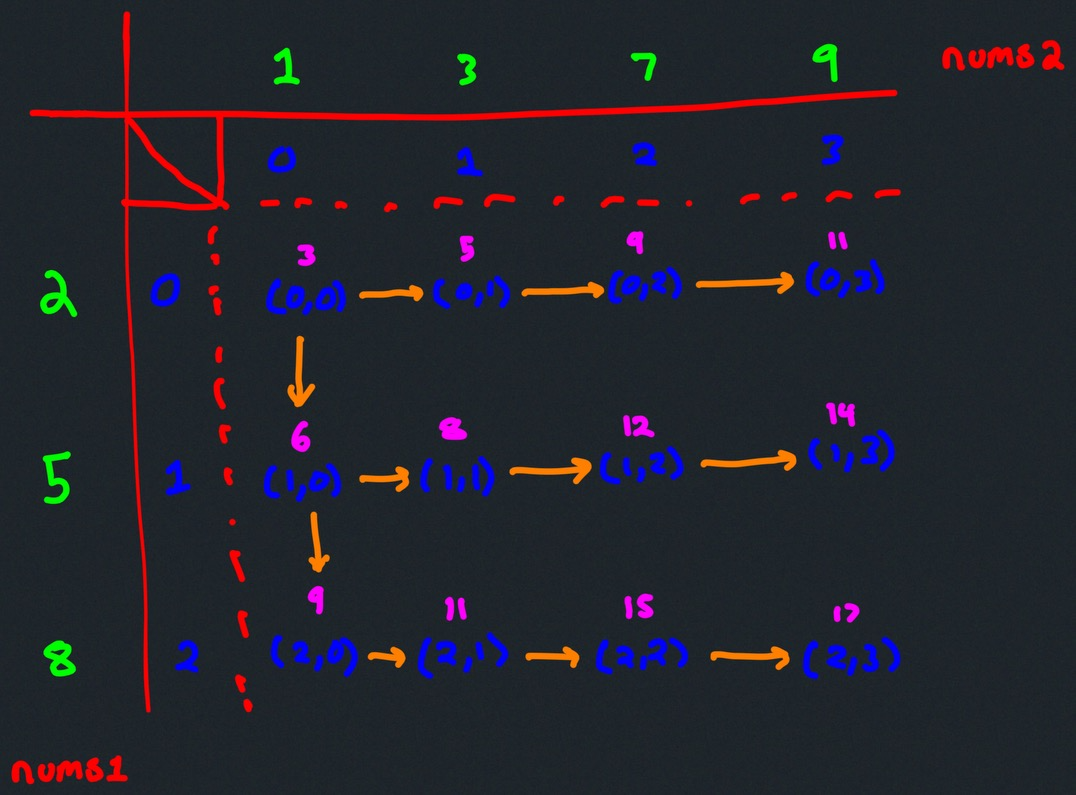

LC 74. Search a 2D Matrix

Write an efficient algorithm that searches for a value in an m x n matrix. This matrix has the following properties:

- Integers in each row are sorted from left to right.

- The first integer of each row is greater than the last integer of the previous row.

class Solution:

def searchMatrix(self, matrix: List[List[int]], target: int) -> bool:

m = len(matrix)

n = len(matrix[0])

left = 0

right = m * n - 1

while left <= right:

mid = left + (right - left) // 2

val = matrix[mid // n][mid % n]

if target < val:

right = mid - 1

elif target > val:

left = mid + 1

else:

return True

return False

This problem is very similar to the following classic binary search problem: LC 704. Binary Search. The main difference is that in this problem we basically need to view the 2D array matrix as virtually flattened into a 1D array with index values idx bounded by 0 <= idx <= m * n - 1. The key part of the solution above is, of course, the following line: val = matrix[mid // n][mid % n]. This lets us seamlessly find a cell value in the virtually flattened matrix by converting a given index value to the appropriate row and column in the original input matrix. We could make this more explicit by abstracting away the logic in the line above into its own function:

def index_to_cell_val(idx):

row = idx // n

col = idx % n

return matrix[row][col]

The idea is that we can binary search on the virtually flattened 1D array.

LC 35. Search Insert Position (✓)

Given a sorted array of distinct integers and a target value, return the index if the target is found. If not, return the index where it would be if it were inserted in order.

class Solution:

def searchInsert(self, nums: List[int], target: int) -> int:

left = 0

right = len(nums) - 1

while left <= right:

mid = left + (right - left) // 2

if target < nums[mid]:

right = mid - 1

elif target > nums[mid]:

left = mid + 1

else:

return mid

return left

Distinctness of the input integers in nums ensures returning mid will be the index of target if it's found; otherwise, we return left, which will be the index where we should insert target in order to keep a sorted array.

LC 633. Sum of Square Numbers★

Given a non-negative integer c, decide whether there're two integers a and b such that a2 + b2 = c.

class Solution:

def judgeSquareSum(self, c: int) -> bool:

def binary_search(left, right, target):

while left <= right:

b = left + (right - left) // 2

if target < b * b:

right = b - 1

elif target > b * b:

left = b + 1

else:

return True

return False

a = 0

while a * a <= c:

b_squared = c - a * a

if binary_search(0, b_squared, b_squared):

return True

a += 1

return False

Binary search can crop up in all sorts of unexpected places. This is one of them. The idea is that we iteratively search the space [0, c - a^2] for a value of b such that b^2 == c - a^2 that way a^2 + b^2 == c, as desired. The funkier part of the binary search solution is what the usual mid denotes in the binary search itself, namely the b-value we're looking for such that b^2 == target, where target == c - a^2. Hence, when we make adjustments to the left or right endpoints, we're actually comparing the target value against b * b where b takes the role of the normal mid value. If it's ever the case that the target is neither less than b * b nor greater than b * b, then we've found an integer b-value that satisfies the equation (and we've done so using binary search!).

LC 2300. Successful Pairs of Spells and Potions

You are given two positive integer arrays spells and potions, of length n and m, respectively, where spells[i] represents the strength of the ith spell and potions[j] represents the strength of the jth potion.

You are also given an integer success. A spell and potion pair is considered successful if the product of their strengths is at least success.

Return an integer array pairs of length n where pairs[i] is the number of potions that will form a successful pair with the ith spell.

class Solution:

def successfulPairs(self, spells: List[int], potions: List[int], success: int) -> List[int]:

def successful_potions(spell_strength):

# need spell * potion >= success (i.e., potion >= threshold)

threshold = success / spell_strength

left = 0

right = len(potions)

while left < right:

mid = left + (right - left) // 2

if threshold <= potions[mid]:

right = mid

else:

left = mid + 1

return len(potions) - left

potions.sort()

return [ successful_potions(spell) for spell in spells ]

For any potion to actually be successful, it must be greater than or equal to success / spell for any given spell. Since we process potions for each spell in spells, this suggests we should pre-process potions by sorting. Then we can conduct a binary search (where the target is equal to success / spell for a given spell) to determine the where the insertion point would need to be in order for a new potion to be successful. We want everything to the right of that point. Hence, if len(potions) is the length of the potions array and we determine that the leftmost insertion point should occur at i, then we want everything from [i, len(potions) - 1]; that is, (len(potions) - 1) - i + 1 == len(potions) - i, where the first + 1 reflects inclusivity of i. An example will make this clear.

Let's say we sort potions and have potions = [1, 2, 3, 4, 5], and success = 7. We have a spell with a strength of 3. To form a successful pair, we need a potion with a strength of at least 7 / 3 = 2.3333. If we do a binary search for this value on potions, we will find an insertion index of 2. Every potion on this index and to the right can form a successful pair. There are 3 indices in total (the potions with strength 3, 4, 5). In general, if there are m potions, the final index is m - 1. If the insertion index is i, then the range [i, m - 1] has a size of (m - 1) - i + 1 = m - i.

LC 2389. Longest Subsequence With Limited Sum (✓) ★

You are given an integer array nums of length n, and an integer array queries of length m.

Return an array answer of length m where answer[i] is the maximum size of a subsequence that you can take from nums such that the sum of its elements is less than or equal to queries[i].

A subsequence is an array that can be derived from another array by deleting some or no elements without changing the order of the remaining elements.

class Solution:

def answerQueries(self, nums: List[int], queries: List[int]) -> List[int]:

def binary_search(query):

left = 0

right = len(nums)

while left < right:

mid = left + (right - left) // 2

if query < nums[mid]:

right = mid

else:

left = mid + 1

return left

nums.sort()

for i in range(1, len(nums)):

nums[i] += nums[i - 1]

return [ binary_search(query) for query in queries ]

This problem invites us to dust off our knowledge of prefix sums — because that's really what we need to effectively answer this problem. We need to sort the input nums, create a prefix sum (either mutate the input directly, as above, or create a new array), and then conduct a binary search on the prefix sum where each time we try to find what would need to be the rightmost insertion point if we were to add query to the prefix sum array.

Why does this work. Suppose we had the prefix sum [0, 1, 3, 5, 5, 7, 9], and the query value we were given was 5. Where would we need to insert 5 in the prefix sum above to maintain sorted order so that 5 was as far right as possible? It would need to be at index i == 5 (right after the other two 5 values). This means the original numbers in nums responsible for the [0, 1, 3, 5, 5] part of the preifx sum can all be removed so that the sum is less than or equal to the query value 5. That is why the solution above works.

Solution space

Remarks

As noted in a LeetCode editorial on binary search on solution spaces, we need a few conditions to be met in order to effectively conduct our search:

- Possibility/condition/check/feasible function can execute in rougly time — we can quickly, in or better, verify if the task is possible for a given threshold value,

threshold; that is, we define a function,possible(threshold), that returns a boolean that indicates if the given task is possible or impossible when given the specificthresholdvalue. - Max/min characteristic when task is possible given the specific

thresholdvalue — if the task is possible for a numberthresholdand we are looking for

- a maximum, then it is also possible for all numbers less than

threshold. - a minimum, then it is also possible for all numbers greater than

threshold.

- Max/min characteristic when task is impossible given the specific

thresholdvalue — if the task is impossible for a numberthresholdand we are looking for

- a maximum, then it is also impossible for all numbers greater than

threshold. - a minimum, then it is also impossible for all numbers less than

threshold.

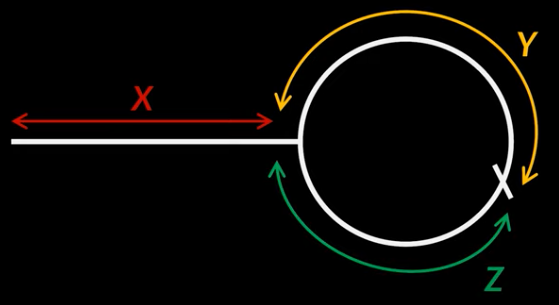

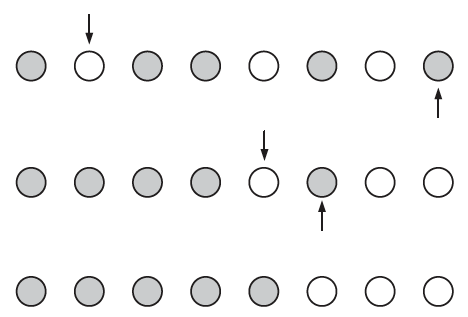

The above depictions can be somewhat difficult to envision at first so it can be helpful to draw out a very simple outline as if we're on a number line from 0 to infinity, left to right, as demonstrated below.

Looking for a maximum threshold:

Example use case (illegal parking): Maximize time spent parked illegally without getting a ticket. Under various conditions (e.g., parking enforcers, location, etc.), we can imagine this being possible for a certain amount of time before it becomes impossible. We'd like to maximize the POSSIBLE amount of time we do not have to worry about getting a ticket before it becomes IMPOSSIBLE to avoid getting a ticket:

-----------------------

| Possible | Impossible |

-----------------------

0 ^ ...inf

(threshold binary searched for)

As can be seen above, given a threshold amount of time, our task of going undetected when parked illegally is

- possible for all numbers less than or equal to

threshold - impossible for all numbers greater than

threshold

Looking for a minimum threshold:

Example use case (mandatory online trainings): Minimize time spent on a manadotry online training page before clicking to continue without arousing suspicion. Many online training requirements are modules that are "click-through" in nature, where an employee must complete the module but should not "click to continue" until a sufficient amount of time has elapsed to indicate the employee has possibly consumed all of the information on the page. The goal is to minimize the amount of time spent on any given page. We can imagine this being impossible for a certain amount of time before it becomes possible. We'd like to minimize the POSSIBLE amount of time we are required to be on any given training page where it is IMPOSSIBLE to avoid doing so until a certain amount of time has elapsed:

-----------------------

| Impossible | Possible |

-----------------------

0 ^ ...inf

(threshold binary searched for)

As can be seen above, given a threshold amount of time, our task of having to remain on a given training page before being allowed to continue making progress through the training is

- impossible for all numbers less than

threshold - possible for all numbers greater than or equal to

threshold

TAKEAWAY:

-

Minimum: When searching to minimize a value on a solution space, our goal is to find a value,

threshold, where the condition we're testing for is possible (i.e.,possible(threshold)returnstrue) andthresholdis minimized within the region of possibilities. Specifically, if we letlandrrepresent the smallest and largest possible solutions in the solution space, respectively, then we're essentially searching for thethresholdvalue, sayx, betweenlandrsuch thatpossible(x)returnstruebut any smaller value ofx, sayx - ε, results inpossible(x - ε)returningfalse. We can use our previous illustration to capture this:l x r

-----------------------

| Impossible | Possible |

----------------------- -

Maximum: When searching to maximize a value on a solution space, our goal is to find a value,

threshold, where the condition we're testing for is possible (i.e.,possible(threshold)returnstrue) andthresholdis maximized within the region of possibilities. Specifically, if we letlandrrepresent the smallest and largest possible solutions in the solution space, respectively, then we're essentially searching for thethresholdvalue, sayx, betweenlandrsuch thatpossible(x)returnstruebut any larger value ofx, sayx + ε, results inpossible(x + ε)returningfalse. We can use our previous illustration to capture this:l x r

-----------------------

| Possible | Impossible |

-----------------------

The template below makes everything discussed above feasible:

def binary_search_sol_space(arr):

def possible(threshold):

# this function is implemented depending on the problem

return BOOLEAN

left = MINIMUM_POSSIBLE_ANSWER # minimum possible value in solution space (inclusive)

right = MAXIMUM_POSSIBLE_ANSWER # maximum possible value in solution space (inclusive)

result = -1 # desired result (-1 to indicate no valid value found yet)

while left <= right: # continue search while range is valid

mid = left + (right - left) // 2

if possible(mid):

result = mid # mid satisfies condition; update result

right = mid - 1 # adjust right to find smaller valid value (minimization)

else:

left = mid + 1 # mid doesn't satisfy condition; search right half

# IMPORTANT: swap `right = mid - 1` and `left = mid + 1`

# if looking to maximize valid value (i.e., instead of minimize)

return result # return best value found satisfying condition

Above, left, right, result stand for l, r, x, respectively, in regards to the notation we used previously to visualize the solution space on which we are binary searching. A few things worth noting about the template above:

- Line

13: This is whereresultis updated. Note howresultis only updated oncepossible(mid)is true for somemidvalue; that is, if what we're looking to minimize or maximize is actually not possible, thenresultwill never be updated, and a value of-1will be returned to indicate no valid value was found. - Lines

14and16: Whether or not these lines should be swapped depends on if the problem at hand is a minimization (no swap) or maximization (swap) problem. Specificially, the template above, in its default state, is set up for minimization problems. Why? Because once a validmidvalue is found, we narrow the search space to the left withright = mid - 1, which corresponds to trying to find a smaller valid value (minimization). On the other hand, if we're trying to maximize the valid values we find, then we need to narrow the search space to the right withleft = mid + 1, which corresponds to trying to find a larger valid value (maximization).

Thankfully, the template above is quite similar to the template for binary searching on arrays, which means less effort needs to be devoted to memorization, and more time can be spent on understanding.

def binary_search_sol_space(arr):

def possible(threshold):

# this function is implemented depending on the problem

return BOOLEAN

left = MINIMUM_POSSIBLE_ANSWER # minimum possible value in solution space (inclusive)

right = MAXIMUM_POSSIBLE_ANSWER # maximum possible value in solution space (inclusive)

result = -1 # desired result (-1 to indicate no valid value found yet)

while left <= right: # continue search while range is valid

mid = left + (right - left) // 2

if possible(mid):

result = mid # mid satisfies condition; update result

right = mid - 1 # adjust right to find smaller valid value (minimization)

else:

left = mid + 1 # mid doesn't satisfy condition; search right half

# IMPORTANT: swap `right = mid - 1` and `left = mid + 1`

# if looking to maximize valid value (i.e., instead of minimize)

return result # return best value found satisfying condition

Examples

LC 875. Koko Eating Bananas (✓) ★

Koko loves to eat bananas. There are n piles of bananas, the ith pile has piles[i] bananas. The guards have gone and will come back in h hours.

Koko can decide her bananas-per-hour eating speed of k. Each hour, she chooses some pile of bananas and eats k bananas from that pile. If the pile has less than k bananas, she eats all of them instead and will not eat any more bananas during this hour.

Koko likes to eat slowly but still wants to finish eating all the bananas before the guards return.

Return the minimum integer k such that she can eat all the bananas within h hours.

class Solution:

def minEatingSpeed(self, piles: List[int], h: int) -> int:

def possible(speed):

hours_spent = 0

for banana in piles:

hours_spent += -(banana // -speed)

if hours_spent > h:

return False

return True

left = 1

right = max(piles)

while left < right:

mid = left + (right - left) // 2

if possible(mid):

right = mid

else:

left = mid + 1

return left

The idea is for Koko to go as slowly as possible while still being able to eat all bananas within h hours. Our goal is to find the speed at which Koko can eat all bananas but as soon as we decrease the speed it becomes impossible (that will give us the minimized speed for which eating all bananas is possible).

Hence, we can binary search on the solution space where the solution space is comprised of speeds in the range [min_possible_speed, max_possible_speed], inclusive. What would make sense as a minimum possible speed? The speed needs to be an integer and it clearly can't be 0; hence, the minimum possible speed is 0 so we set left = 0. What about the maximum possible speed? Each pile can be consumed within a single hour if the speed is the size of the pile with the greatest number of bananas; hence, the maximum possible speed we should account for is max(piles) so we set right = max(piles).

All that is left to do now is to greedily search for the leftmost value in the solution space that satisfies the possible constraint.

LC 1631. Path With Minimum Effort (✓) ★★

You are a hiker preparing for an upcoming hike. You are given heights, a 2D array of size rows x columns, where heights[row][col] represents the height of cell (row, col). You are situated in the top-left cell, (0, 0), and you hope to travel to the bottom-right cell, (rows-1, columns-1) (i.e., 0-indexed). You can move up, down, left, or right, and you wish to find a route that requires the minimum effort.

A route's effort is the maximum absolute difference in heights between two consecutive cells of the route.

Return the minimum effort required to travel from the top-left cell to the bottom-right cell.

class Solution:

def minimumEffortPath(self, heights: List[List[int]]) -> int:

def valid(row, col):

return 0 <= row < m and 0 <= col < n

def possible(route_max_abs_diff):

seen = {(0,0)}

def dfs(row, col):

if row == m - 1 and col == n - 1:

return True

for dr, dc in dirs:

next_row, next_col = row + dr, col + dc

if valid(next_row, next_col) and (next_row, next_col) not in seen:

cell_val = heights[row][col]

adjacent_cell_val = heights[next_row][next_col]

if abs(adjacent_cell_val - cell_val) <= route_max_abs_diff:

seen.add((next_row, next_col))

if dfs(next_row, next_col):

return True

return False

return dfs(0, 0)

m = len(heights)

n = len(heights[0])

dirs = [(1,0),(-1,0),(0,1),(0,-1)]

left = 0

right = max([element for row in heights for element in row]) - 1

while left < right:

mid = left + (right - left) // 2

if possible(mid):

right = mid

else:

left = mid + 1

return left

This is a great problem for a variety of reasons. It can be somewhat tricky at first though. The idea is that if we can find a path from the top left to the bottom right using a certain amount of effort, then we can certainly find a path that works for any greater amount of effort (i.e., effort + 1, effort + 2, etc.). But can we find a valid path using less effort? That's the real question.

If we let our solution space be the amount of effort required for a valid path, then we can binary search from the minimum possible amount of effort to the maximum possible amount of effort, inclusive. The goal is to find the effort amount for a valid path where any effort less than that would not result in a valid path.

What are the min/max effort bounds? The minimum possible effort is 0 because all of the numbers in the path could be the same. What about the maximum possible effort? That would be the max amount in the matrix minus the smallest amount:

max([element for row in heights for element in row]) - 1

We could also observe the constraint 1 <= heights[i][j] <= 10^6, which means either of the following options would be valid for the problem at hand:

# option 1

left = 0

right = max([element for row in heights for element in row]) - 1

# option 2

left = 0

right = 10 ** 6 - 1

The first option is obviously more costly in some respects, but the second option could result in a maximum boundary that is much larger than what we need. Since binary search is so fast, the second option is really not an issue though.

Finally, it should be noted that a stack-based DFS is also quite effective here to avoid the space overhead required by the call stack to deal with recursion:

class Solution:

def minimumEffortPath(self, heights: List[List[int]]) -> int:

def valid(row, col):

return 0 <= row < m and 0 <= col < n

def possible(route_max_abs_diff):

seen = {(0,0)}

def dfs(start_row, start_col):

stack = [(start_row, start_col)]

while stack:

row, col = stack.pop()

if row == m - 1 and col == n - 1:

return True

for dr, dc in dirs:

next_row, next_col = row + dr, col + dc

if valid(next_row, next_col) and (next_row, next_col) not in seen:

cell_val = heights[row][col]

next_cell_val = heights[next_row][next_col]

if abs(next_cell_val - cell_val) <= route_max_abs_diff:

seen.add((next_row, next_col))

stack.append((next_row, next_col))

return False

return dfs(0, 0)

m = len(heights)

n = len(heights[0])

dirs = [(1,0),(-1,0),(0,1),(0,-1)]

left = 0

right = max([element for row in heights for element in row]) - 1

while left < right:

mid = left + (right - left) // 2

if possible(mid):

right = mid

else:

left = mid + 1

return left

LC 1870. Minimum Speed to Arrive on Time (✓) ★

You are given a floating-point number hour, representing the amount of time you have to reach the office. To commute to the office, you must take n trains in sequential order. You are also given an integer array dist of length n, where dist[i] describes the distance (in kilometers) of the ith train ride.

Each train can only depart at an integer hour, so you may need to wait in between each train ride.

- For example, if the

1st train ride takes1.5hours, you must wait for an additional0.5hours before you can depart on the2nd train ride at the 2 hour mark.

Return the minimum positive integer speed (in kilometers per hour) that all the trains must travel at for you to reach the office on time, or -1 if it is impossible to be on time.

Tests are generated such that the answer will not exceed 107 and hour will have at most two digits after the decimal point.

class Solution:

def minSpeedOnTime(self, dist: List[int], hour: float) -> int:

if len(dist) > -(hour // -1):

return -1

def possible(speed):

hours_spent = 0

for i in range(len(dist) - 1):

distance = dist[i]

hours_spent += -(distance // -speed)

if hours_spent > hour:

return False

hours_spent += dist[-1] / speed

return hours_spent <= hour

left = 1

right = 10 ** 7

while left < right:

mid = left + (right - left) // 2

if possible(mid):

right = mid

else:

left = mid + 1

return left

This one is a bit wonky to reason about at first. In part because of how the time elapsed is evaluated. It's similar to LC 875. Koko Eating Bananas in that we always take the ceiling of time evaluations, but here the time spent on the last train is not rounded up (we rounded up the time spent on all piles for the Koko eating bananas problem).

When will it be impossible to reach the office on time? It's not when len(dist) > hour, as LeetCode's second example with input dist = [1,3,2], hour = 2.7 shows. However, it will be impossible if len(dist) > ceil(hour). The third example, with input dist = [1,3,2], hour = 1.9 illustrates this, where the earliest the third train cen depart is at the 2 hour mark.

The idea is to conduct a binary search on the range of speeds [min_speed_possible, max_speed_possible] for which we'll be able to make the trip on time (i.e., where the total number of hours spent is less than or equal to hour), where we want to minimize the speed required to make the trip on time. If we can make the trip on time for a given speed, then we can definitely make the trip on time if we increase the speed to speed + 1. We want to determine when making it on time is possible for speed but impossible for any valid speed value less than this. Binary search it is!

What's the minimum possible speed? We're told the speed reported must be a positive integer so we set left = 1. What about the maximum possible speed? We're told that the answer will not exceed 10 ** 7; hence, we set right = 10 ** 7.

LC 1283. Find the Smallest Divisor Given a Threshold (✓)

Given an array of integers nums and an integer threshold, we will choose a positive integer divisor, divide all the array by it, and sum the division's result. Find the smallest divisor such that the result mentioned above is less than or equal to threshold.

Each result of the division is rounded to the nearest integer greater than or equal to that element. (For example: 7/3 = 3 and 10/2 = 5).

It is guaranteed that there will be an answer.

class Solution:

def smallestDivisor(self, nums: List[int], threshold: int) -> int:

def possible(divisor):

running_sum = 0

for num in nums:

running_sum += -(num // -divisor)

if running_sum > threshold:

return False

return True

left = 1

right = max(nums)

while left < right:

mid = left + (right - left) // 2

if possible(mid):

right = mid

else:

left = mid + 1

return left

This problem is quite similar to LC 875. Koko Eating Bananas. Our solution space is a range of possible divisors, and our goal is to minimize the divisor so that the running sum obtained by dividing each number in nums by divisor (and perforing the subsequent rounding up to the nearest integer) never exceeds threshold.

If we can achieve this task for divisor, then we can definitely achieve the same task by increasing the value of divisor (e.g., divisor + 1). Our goal, then, is to find a divisor such that the task is possible but as soon as we decrease the value of divisor the task becomes impossible.

What would the minimum divisor be for our solution space? We're told it must be a positive integer; hence, we set left = 1. What about the maximum divisor? Since each division result gets rounded up to the nearest integer, the smallest the running sum could be would occur if we chose the divisor to be the maximum value in nums. Then no division would result in a value greater than 1, and since we're told nums.length <= threshold <= 10^6, we let right = max(nums).

LC 410. Split Array Largest Sum (✓) ★★★

Given an array nums which consists of non-negative integers and an integer m, you can split the array into m non-empty continuous subarrays.

Write an algorithm to minimize the largest sum among these m subarrays.

class Solution:

def splitArray(self, nums: List[int], k: int) -> int:

def possible(max_sum):

num_subarrays = 0

subarray_sum = 0

idx = 0

while idx < len(nums):

if nums[idx] > max_sum:

return False

subarray_sum += nums[idx]

if subarray_sum > max_sum:

subarray_sum = nums[idx]

num_subarrays += 1

if num_subarrays > k:

return False

idx += 1

return (num_subarrays + 1) <= k

left = 0

right = sum(nums)

while left < right:

mid = left + (right - left) // 2

if possible(mid):

right = mid

else:

left = mid + 1

return left

This problem is excellent and somewhat similar to LC 1231. Divide Chocolate in nature. The tip off that the problem may involve binary searching on a solution space is given by the fact if a subarray sum subarray_sum works as a solution to the problem then increasing the value of subarray_sum will certainly work as well. Our goal is to find a subarray sum that, when decreased at all, results in not being able to fulfill the requirements of the problem. All of this implies we should be able to conduct a binary search on the solution space of minimum subarray sum values.

What would the smallest possible subarray sum value be? Since values of 0 are allowed in nums, we should set left = 0. What about the largest possible subarray sum value? No subarray can have a larger sum than the entire array; hence, we set right = sum(nums).

The harder part for this problem is designing the possible function effectively. The intuition is that we construct the k subarray sums in a greedy fashion, where we keep adding to one of the k subarrays until the given max_sum has been exceeded, at which point we move on to constructing the next subarray sum. If the number of subarrays we must use to accomplish this ever exceeds k, then we issue an early return of false. If, however, we process all values and the total number of subarrays used is less than or equal to k, then we return true because k will always be less than or equal to nums.length per the constraint 1 <= k <= min(50, nums.length); that is, if somehow we've processed all values in nums and have never exceeded max_sum for a subarray sum and the number of subarrays used is much smaller than k, then we can simply distribute the values in the subarrays to fill up the remaining empty subarrays until the total number of subarrays equals k (the sum of the subarrays from which values are borrowed can only decrease due to the constraint 0 <= nums[i] <= 10^6).

LC 1482. Minimum Number of Days to Make m Bouquets★★

Given an integer array bloomDay, an integer m and an integer k.

We need to make m bouquets. To make a bouquet, you need to use k adjacent flowers from the garden.

The garden consists of n flowers, the ith flower will bloom in the bloomDay[i] and then can be used in exactly one bouquet.

Return the minimum number of days you need to wait to be able to make m bouquets from the garden. If it is impossible to make m bouquets return -1.

class Solution:

def minDays(self, bloomDay: List[int], m: int, k: int) -> int:

def possible(days):

bouquets_formed = flower_count = 0

for flower in bloomDay:

if flower <= days:

flower_count += 1

else:

flower_count = 0

if flower_count == k:

bouquets_formed += 1

flower_count = 0

if bouquets_formed == m:

return True

return False

# not enough flowers for required number of bouquets

if len(bloomDay) < (m * k):

return -1

left = min(bloomDay)

right = max(bloomDay)

while left < right:

mid = left + (right - left) // 2

if possible(mid):

right = mid

else:

left = mid + 1

return left

As usual, the primary difficulty in this problem is identifying it as having a nice binary search solution. The idea is to binary search on the solution space where the solution space is identified as being binary searchable as follows: if I can form m bouquets in d days, then I can definitely form m bouquets in > d days. We want to find the minimum number for d such that trying to form m bouquets in any fewer days is impossible. We can binary search for that number of days, as shown above.

LC 1231. Divide Chocolate (✓) ★★★

You have one chocolate bar that consists of some chunks. Each chunk has its own sweetness given by the array sweetness.

You want to share the chocolate with your K friends so you start cutting the chocolate bar into K+1 pieces using K cuts, each piece consists of some consecutive chunks.

Being generous, you will eat the piece with the 8* and give the other pieces to your friends.

Find the maximum total sweetness of the piece you can get by cutting the chocolate bar optimally.

class Solution:

def maximizeSweetness(self, sweetness: List[int], k: int) -> int:

def possible(min_sweetness):

pieces = 0

piece_sweetness = 0

for chunk_sweetness in sweetness:

piece_sweetness += chunk_sweetness

if piece_sweetness >= min_sweetness:

pieces += 1

piece_sweetness = 0

if pieces == k + 1:

return True

return False

left = 1

right = sum(sweetness) + 1

while left < right:

mid = left + (right - left) // 2

if not possible(mid):

right = mid

else:

left = mid + 1

return left - 1

This is such a fantastic problem, but it is quite difficult. As usual (for binary search problems on solution spaces anyway), constructing the possible function is where much of the difficulty lies. Our goal is to ensure we can actually come up with k + 1 pieces of chocolate to distribute where each piece meets or exceeds the required min_sweetness threshold. If we can do that, then we're in business. But, of course, as the problem indicates, we want to maximize the minimum sweetness of our own piece of chocolate (since all other pieces distributed must have the same or more sweetness compared to our own).

Hence, we need to binary search on a range of possible minimum sweetness values. What would the smallest possible sweetness be? We're told from the constraint that every chunk of the chocolate has a sweetness of at least 1; hence, we set left = 1. What about the largest possible sweetness? Note that k == 0 is possible, which means there's a possibility where we need to share the chocolate bar with no one — in such a case, we would want to consume all of the sweetness, sum(sweetness). But since we're binary searching a solution space for a maximum value, we need to set right = sum(sweetness) + 1 as opposed to right = sum(sweetness). Why? Because we might miss the maximum value in an off-by-one error otherwise; for example, consider the input sweetness = [5,5], k = 0. The while loop terminates once left == right and right == sum(sweetness), but we return left - 1, which is equal to 10 - 1 == 9 instead of the obviously correct answer of 10.

LC 1552. Magnetic Force Between Two Balls★★

In universe Earth C-137, Rick discovered a special form of magnetic force between two balls if they are put in his new invented basket. Rick has n empty baskets, the ith basket is at position[i], Morty has m balls and needs to distribute the balls into the baskets such that the minimum magnetic force between any two balls is maximum.

Rick stated that magnetic force between two different balls at positions x and y is |x - y|.

Given the integer array position and the integer m. Return the required force.

class Solution:

def maxDistance(self, position: List[int], m: int) -> int:

def possible(min_mag_force):

balls_placed = 1

prev_ball_pos = position[0]

for idx in range(1, len(position)):

curr_ball_pos = position[idx]

if curr_ball_pos - prev_ball_pos >= min_mag_force:

balls_placed += 1

prev_ball_pos = curr_ball_pos

if balls_placed == m:

return True

return False

position.sort()

left = 1

right = (position[-1] - position[0]) + 1

while left < right:

mid = left + (right - left) // 2

if not possible(mid):

right = mid

else:

left = mid + 1

return left - 1

The problem description is one of the hardest things about this problem. But after fighting to understand it, our thinking will gradually start to resemble what's included in the hints and point us to binary search on the solution space as a good strategy:

Hint 1: If you can place balls such that the answer is x,

then you can do it for y where y < x.

Hint 2: Similarly, if you cannot place balls such that

the answer is x then you can do it for y where y > x.

Hint 3: Binary search on the answer and greedily see if it is possible.

This problem is quite similar to LC 1231. Divide Chocolate in many ways, but instead of trying to maximize the sweetness of our least sweet piece of chocolate amongst k friends, we are trying to maximize the magnetic force between the least magnetically attracted pair of balls. The idea is to binary search on possible answer values for the minimum magnetic force required and to maximize that value as much as possible.

Hence, our first task is to build a possible function to determine whether or not the task at hand is possible for some given magnetic force, and we greedily try to determine the possibility; that is, we place a ball whenever the magnetic force provided has been met or exceeded (this ensures the magnetic force provided is, indeed, minimum). All balls placed must have at least min_mag_force magnetic force between them. Our goal is to maximize that value.