Dynamic programming (with attitude)

This post explores dynamic programming. As usual, the Fibonacci numbers are used to introduce several key components of dynamic programming. This sets the stage for more advanced dynamic programming problems: rod cutting, subset sum, and Floyd-Warshall.

The notes below come from the Algorithms with Attitude YouTube channel, specifically the Dynamic Programming playlist, which comprises the following videos: Introduction to Dynamic Programming: Fibonacci Numbers, Rod Cutting, Subset Sum, and Floyd-Warshall All-Pairs Shortest Paths: A Dynamic Programming Approach.

There are several sections in this post, accessible in the table of contents on the right, but most of the sections have been listed below for ease of reference and navigation.

- Introduction to dynamic programming - Fibonacci numbers

- The rod cutting problem

- The subset sum problem

- Floyd-Warshall

Introduction to dynamic programming - Fibonacci numbers

Fibonacci number definition

Let's go ahead and start with a definition of the Fibonacci numbers:

$$F_0 = 0, \quad F_1 = 1, \quad F_n = F_{n-1} + F_{n-2} \ \text{ for } n \ge 2.$$

Simple recursive algorithm

Unsurprisingly, we can implement a simple recursive program to calculate the $n$th Fibonacci number:

    Fib(n) {
        if (n < 2)
            return n
        return Fib(n - 1) + Fib(n - 2)
    }

And it works! But there's a problem: it's super slow. To see this for ourselves, consider the Python implementation below that uses the Piston API for code execution. We can calculate up to roughly the 33rd Fibonacci number, but the run times out when trying to calculate the 34th Fibonacci number:


As shown above, even calculating the 33rd Fibonacci number takes a good bit of time. It's certainly not quick.
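The recursion can be sketched in Python as follows. This is a minimal illustration, not the post's original runnable snippet; the helper `count_calls` is an addition for illustration (it does not appear in the original) that tallies how many calls the naive recursion makes, which makes the slowdown concrete.

```python
def fib(n: int) -> int:
    """Naive recursive Fibonacci, mirroring the Fib(n) pseudocode."""
    if n < 2:
        return n
    return fib(n - 1) + fib(n - 2)


def count_calls(n: int) -> int:
    """Count the calls the naive recursion makes (hypothetical helper)."""
    if n < 2:
        return 1  # a base-case call still counts as one call
    return 1 + count_calls(n - 1) + count_calls(n - 2)


# The call count grows roughly as fast as the Fibonacci numbers themselves.
for n in (8, 16, 24):
    print(f"fib({n}) = {fib(n)}, calls = {count_calls(n)}")
```

For `n = 8` the recursion already makes 67 calls to produce the value 21, and each increment of `n` multiplies the work by roughly 1.6.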

Recursion tree

Let's take a look at the recursion tree for running Fib(8):

The call tree above reflects the order in which Fib calls were made; that is, if we make the call to the previous Fibonacci number first (i.e., Fib(n - 1)), then the left spine of the tree has length 7; similarly, each time we have a second recursive call, the value goes down by 2 instead of 1 (i.e., Fib(n - 2)); hence, the right spine of the tree will have length 4:

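More generally, the right spine has length about $n/2$, so the top $n/2$ levels of the tree are complete; that is, each of those levels is completely full, as in a complete binary tree:

The tree has at least $2^{n/2}$ nodes, where each node represents a call to Fib. That's exponential. Hence, we have a runtime of $\Omega(2^{n/2})$; more precisely: $\Theta(\varphi^n)$, where $\varphi = (1 + \sqrt{5})/2 \approx 1.618$ is the golden ratio.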
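One way to see where an exponential bound comes from, as a sketch: let $T(n)$ be the number of calls made when computing Fib(n). The symbol $T$ is introduced here for illustration and is not part of the original notes.

$$
\begin{aligned}
T(0) &= T(1) = 1, \\
T(n) &= T(n-1) + T(n-2) + 1 \;\ge\; 2\,T(n-2) \quad (n \ge 2), \\
T(n) &\ge 2^{\lfloor n/2 \rfloor}.
\end{aligned}
$$

The inequality in the second line uses the fact that $T$ is nondecreasing, so $T(n-1) \ge T(n-2)$. Solving the recurrence exactly gives $T(n) = 2F_{n+1} - 1$, where $F_n$ is the $n$th Fibonacci number, so the call count grows at the same rate as the Fibonacci numbers themselves.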

But there's hope. Note the two subtrees rooted at 5 in the middle of the tree:

These subtrees are exactly the same. We're just repeating the same work. Not only that, but there's another subtree rooted at 5 all the way on the left (the left child of 6 on the left spine). Every one of those subtrees computes the 5th Fibonacci number, and this number is always 5. It's kind of dumb to repeat all of that work three separate times. The subtree rooted at 4 may be smaller, but it gets repeated five times!

This explains the motivation behind the first big idea of dynamic programming: remember the values you have recursively computed so that you don't repeat any work.

Memoized algorithm

    Fibonacci(n) {
        Allocate Ans[0...n] = NIL everywhere
        Ans[0] = 0
        Ans[1] = 1
        return Fib(n, Ans)
    }

    Fib(n, Ans[]) {
        if (Ans[n] == NIL)
            Ans[n] = Fib(n - 1, Ans) + Fib(n - 2, Ans)
        return Ans[n]
    }

We can add a dummy wrapper Fibonacci (line 1) that allocates a table to hold all the values (line 2). And then we can just slightly change the main program to check the table for an answer before making any recursive calls (line 9). If the answer was not stored in the table, then make the recursive calls, but, critically, save the value (line 10) before returning it (line 11). We could have kept the same base cases as before, but we instead incorporated them directly into the table (lines 3 and 4).

If we implement the new pseudocode above, then we see that calculating the 34th Fibonacci number is no longer a problem. Even calculating something as large as the 300th Fibonacci number isn't an issue:
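A minimal Python sketch of the memoized pseudocode might look like the following. The names `fibonacci` and `_fib` are chosen here for illustration, and `None` plays the role of NIL; the extra guard for `n == 0` is an assumption added so that the table never gets indexed out of bounds.

```python
def fibonacci(n: int) -> int:
    """Wrapper: allocate the table, seed the base cases, start the recursion."""
    ans = [None] * (n + 1)  # None stands in for NIL
    ans[0] = 0
    if n >= 1:  # guard so that n == 0 does not index past the table
        ans[1] = 1
    return _fib(n, ans)


def _fib(n: int, ans: list) -> int:
    """Check the table first; only recurse when the answer is not stored yet."""
    if ans[n] is None:
        ans[n] = _fib(n - 1, ans) + _fib(n - 2, ans)
    return ans[n]


print(fibonacci(34))
print(fibonacci(300))
```

The recursion still goes about `n` levels deep, so with CPython's usual default recursion limit of 1000 this sketch is only suitable for `n` in the hundreds.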


Pruned recursion tree

What effect does "remembering recursively computed values" have on the recursion tree? Let's find out. Here's how our "remembering table" starts (a value of -1 represents a null value, and the base case values have been filled in):

The recursion tree starts filling out much as before:

Within the table, the recursive calls shown above in the recursion tree start from the right and keep moving left towards the base cases:

Once we actually get down to the base cases, the table starts filling in (from the left and in order):

In the recursion tree, the left spine looks exactly the same as before, and each of the first-time calls we make to compute the $i$th value has a subtree:

But once we leave that spine, the second time we try to compute the $i$th value, we just look up the answer without any recursive calls, essentially pruning that subtree down to just a leaf. For example, in the recursion tree shown above, suppose we've traveled down the spine, and we're working our way back up, and we're about to make the recursive call to fib(3) from fib(5):

But we've already computed the third Fibonacci number! Our lookup table allows us to compute fib(3) in constant time, making it possible for us to not repeat any work (i.e., the subtree to the right of fib(5) is pruned to just be a leaf now).

The process illustrated above is the main idea behind dynamic programming. It's not "programming by James Bond" — it gets its name partially from dynamic memory allocation used to store the recursive subproblem answers. The table that holds the recursive subproblem answers is like a well-organized bunch of post-it notes or memos. The method above is fittingly called memoization (not memorization). It allows us to keep the same natural recursive structure of our code, but it keeps us from recomputing values.

Alternate version (order of recursively computed values)

It's worth looking at a very slightly different version of the program with just a switch in the order of our recursive calls:

Original order:

    Fib(n) {
        if (n < 2)
            return n
        return Fib(n - 1) + Fib(n - 2)
    }

Altered order:

    Fib(n) {
        if (n < 2)
            return n
        return Fib(n - 2) + Fib(n - 1)
    }

What happens to the recursion tree? For the un-memoized version, it looks the same as before, except that now the long spine is on the right:

But for the memoized version, the tree will actually look different (it's decrementing by values of 2 instead of 1 down the left spine):

In the table, we see recursive calls skipping every other value:

And then calling those values as we start to fill in lower values; that is, consider what happens once we get to the following point in the recursion tree, where we've worked our way back up to the subtree rooted at 4, where it's now time to make the second recursive call:

What gets called here? It's fib(n - 1), not fib(n - 2), since we changed the order of the recursive calls; hence, we call fib(3). What gets called first from fib(3)? It's fib(1), which is a base case, and the second recursive call on the right is fib(2), which is not a base case. But we've already computed fib(2) on the left spine and its value is available for reference in our lookup table. We do not repeat the same work. The tree structure just looks slightly different:

The final recursion tree for this altered version looks like the following:

This looks a good bit different from the recursion tree for the original memoized version:

The recursion tree for the altered version is only half as deep as the original version (4 total levels instead of 7), but the tree width is now twice as wide (maximum of 4 nodes on a level instead of 2). Note how both trees have the exact same number of nodes even though they are arranged differently.

Table fill order

For the memoized implementations we've been discussing, both the original version and the altered version, even though the recursive calls are made in different orders (and have very different looking recursion trees), the table gets filled in exactly the same order as before, from left to right, one at a time. We can verify this for ourselves by adding a print statement to print the most recently found Fibonacci number once it's been added to the table:
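As a rough sketch of that experiment, the function below records the index of each table entry at the moment it is filled, instead of printing it. The name `fill_order` and the flag `smaller_first` are hypothetical, introduced only to switch between the two call orders.

```python
def fill_order(n: int, smaller_first: bool) -> list:
    """Return the indices of the memo table in the order they get filled.

    smaller_first=False mimics fib(n - 1) + fib(n - 2) (original version);
    smaller_first=True mimics fib(n - 2) + fib(n - 1) (altered version).
    """
    ans = [None] * (n + 1)
    ans[0] = 0
    if n >= 1:
        ans[1] = 1
    order = []

    def fib(i: int) -> int:
        if ans[i] is None:
            if smaller_first:
                a = fib(i - 2)
                b = fib(i - 1)
            else:
                b = fib(i - 1)
                a = fib(i - 2)
            ans[i] = a + b
            order.append(i)  # record the index right after it is stored
        return ans[i]

    fib(n)
    return order


print(fill_order(8, smaller_first=False))
print(fill_order(8, smaller_first=True))
```

Both calls report the same sequence of indices, 2 through 8 in increasing order, even though the two versions visit the subproblems in different orders.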


Why does the table get filled in exactly the same order for both versions? Since the altered version results in a recursion tree that's a bit easier to draw, let's use that as the basis for our discussion. If we call fib(14) using the altered version, then we get the following recursion tree:

And our completed table looks as follows:

What we want to figure out is the why behind the second bullet point here:

- The original and altered versions call subproblems in different orders, that is, fib(n - 1) + fib(n - 2) for the original and fib(n - 2) + fib(n - 1) for the altered.

- Both versions complete subproblems and fill in the table from left to right in the exact same order.

- Each answer needs the answer to its left before it can be computed.

Let's focus on one arbitrary value from our table, somewhere in the middle. Let's say we want to focus on the 9th Fibonacci number. The 9th Fibonacci number depends on the 7th and 8th Fibonacci numbers:

Hence, our program needs to finish computing the 7th and 8th Fibonacci numbers before computing the 9th Fibonacci number no matter what order the recursive calls are made. We can imagine that completing each of the recursive calls is a "prerequisite" for the current value we're computing; that is, the call fib(x) has recursive calls fib(x - 1) and fib(x - 2) as prerequisites or dependencies that need to be resolved before fib(x) can actually be computed.

It's informative to look at the dependencies for each call. Doing so lets us see the entire chain of dependencies for the whole graph:

Iterating to eliminate recursion

Above, we can see that each subproblem gets pointed to by its prerequisites. The ordering suggests we may be able to tackle this problem iteratively instead of recursively by simply filling in the table in an order that finishes prerequisites for any node before getting to that node:

    Fibonacci(n) {
        Allocate Ans[0...n]
        Ans[0] = 0
        Ans[1] = 1
        for(i = 2; i <= n; i++) {
            Ans[i] = Ans[i - 2] + Ans[i - 1]
        }
        return Ans[n]
    }

Since our table is just a one-dimensional array and each value needs the one to its left to be finished before it, there's only one way to fill in the table from left to right. But more complex dynamic programming problems will have multi-dimensional tables with more choices.

Once we know what order the table needs to be filled in, we can just fill it in that order. This gives us the iterated, so-called "bottom-up" version of the program instead of the memoized, recursive, so-called "top-down" version:


Above, we use a simple loop through one index to fill the table. Filling in each space takes constant time, so it takes linear time in total: $O(n)$.
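A minimal Python sketch of the bottom-up pseudocode is shown here for reference. The early return for `n < 2` is an addition (an assumption, not in the pseudocode) so that the table is never indexed out of bounds when `n` is 0.

```python
def fibonacci(n: int) -> int:
    """Bottom-up Fibonacci: fill the table left to right, no recursion."""
    if n < 2:  # added guard: the table below needs at least two slots
        return n
    ans = [0] * (n + 1)
    ans[1] = 1
    for i in range(2, n + 1):
        # Both prerequisites sit to the left and are already filled in.
        ans[i] = ans[i - 2] + ans[i - 1]
    return ans[n]


print(fibonacci(34))
```

Because there is no recursion, this version is not constrained by the interpreter's recursion limit the way the memoized one is.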

Optimizing space

If the runtime of the iterated version shown above ("bottom-up") is $O(n)$, and the runtime of the memoized recursive version we started with ("top-down") is also $O(n)$, then why bother? First of all, the iterative version is faster: it replaces the overhead of recursive function calls with a simple loop. More importantly, it lets us look at the table more carefully to see if we actually need the entire table.

When computing the 9th Fibonacci number using the iterative version, we used the 7th and 8th Fibonacci numbers in the table, but after that we never looked at the 7th value again or any value below the 7th value. We basically went from one extreme of not remembering anything (i.e., the non-memoized recursive approach) to the other extreme of remembering everything forever (i.e., using a table to store recursively computed values).

It's okay to forget stuff you don't need anymore. That's the idea behind space optimization for dynamic programming problems. The following two sections show the details for how to go about doing this for the specific problem we've been discussing.

Modular indexing

We can use modular arithmetic to come up with an effective modular indexing scheme for our table. Instead of storing a table of all recursively computed values, we could just store a table of all previously computed values that are required for computing the current, subsequent value. Let the size of our table be k, where k denotes the number of previously computed values that are needed in order to compute the current value. For calculating Fibonacci numbers, we have k = 2. We can construct a solution as follows:

    Fibonacci(n) {
        k = 2
        Allocate Ans[0...k-1]
        Ans[0] = 0
        Ans[1] = 1
        for(i = k; i <= n; i++)
            Ans[i % k] = Ans[(i - 2) % k] + Ans[(i - 1) % k]
        return Ans[n % k]
    }

How exactly does this work? The idea is that, when computing fib(i), the explicit indices (i - 2) % k and (i - 1) % k are used to access the exact positions where the previous Fibonacci numbers are stored, namely (i - 2) % k for fib(i - 2) and (i - 1) % k for fib(i - 1). We use the assignment Ans[i % k] = ... to overwrite what we no longer need. How do we know where the final value to return will be located? Note that the for loop is terminated after assigning Ans[i % k] when i = n; that is, the last value assigned, which is what we ultimately need to return, is located at Ans[n % k].

It's helpful to see the pseudocode above in action. An implementation in Python serves as an acceptable solution on LeetCode for the following problem: LC 509. Fibonacci Number.

    class Solution:
        def fib(self, n: int) -> int:
            k = 2
            ans = [None] * k
            ans[0] = 0
            ans[1] = 1
            for i in range(k, n + 1):
                ans[i % k] = ans[(i - 2) % k] + ans[(i - 1) % k]
            return ans[n % k]

It's worth noting that we can dispense with some of the modular indexing above by always summing ans[1] and ans[0] without considering their specific positions relative to fib(i); that is, the positions of the last two Fibonacci numbers within ans shift with each iteration, and we implicitly rely on the assumption that ans[1] and ans[0] always contain the last two Fibonacci numbers, regardless of their order:

    class Solution:
        def fib(self, n: int) -> int:
            k = 2
            ans = [None] * k
            ans[0] = 0
            ans[1] = 1
            for i in range(k, n + 1):
                ans[i % k] = ans[1] + ans[0]
            return ans[n % k]

This version may seem nicer on the surface, but we arguably lose clarity instead of gaining it; for example, ans[(i - 2) % k] always contains fib(i - 2) in the first version whereas the location of fib(i - 2) becomes unpredictable in the second version.

Variable assignment

A more common approach for optimizing space when computing Fibonacci numbers is to use variable assignment instead of modular indexing and to not maintain a table at all (this is arguably more performant since no modulo operation is needed):

    Fibonacci(n) {
        if (n < 2)
            return n
        prev = 0
        curr = 1
        for (i = 2; i <= n; i++) {
            tmp = prev + curr
            prev = curr
            curr = tmp
        }
        return curr
    }

Implementing the approach above for the same LeetCode problem (i.e., LC 509) is quite simple:

    class Solution:
        def fib(self, n: int) -> int:
            if n <= 1:
                return n
            prev = 0
            curr = 1
            for _ in range(2, n + 1):
                tmp = prev + curr
                prev = curr
                curr = tmp
            return curr

For Python specifically, we can take advantage of its tuple unpacking feature to make the variable assignments even cleaner:

    class Solution:
        def fib(self, n: int) -> int:
            if n <= 1:
                return n
            prev = 0
            curr = 1
            for _ in range(2, n + 1):
                prev, curr = curr, prev + curr
            return curr

Additional example - Tribonacci numbers

It's probably not surprising that there are generalizations of Fibonacci numbers such as "tribonacci numbers", "tetranacci numbers", etc. Let's solve the following LeetCode problem using all space optimization techniques discussed above: LC 1137. N-th Tribonacci Number.

Modular indexing (explicit indices):

    class Solution:
        def tribonacci(self, n: int) -> int:
            k = 3
            ans = [None] * k
            ans[0] = 0
            ans[1] = 1
            ans[2] = 1
            for i in range(k, n + 1):
                ans[i % k] = ans[(i - 3) % k] + ans[(i - 2) % k] + ans[(i - 1) % k]
            return ans[n % k]

Modular indexing (implicit positions):

    class Solution:
        def tribonacci(self, n: int) -> int:
            k = 3
            ans = [None] * k
            ans[0] = 0
            ans[1] = 1
            ans[2] = 1
            for i in range(k, n + 1):
                ans[i % k] = ans[2] + ans[1] + ans[0]
            return ans[n % k]

Variable assignment:

    class Solution:
        def tribonacci(self, n: int) -> int:
            if n <= 2:
                return 0 if n == 0 else 1
            prev_prev = 0
            prev = 1
            curr = 1
            for _ in range(3, n + 1):
                tmp = prev_prev + prev + curr
                prev_prev = prev
                prev = curr
                curr = tmp
            return curr

Variable assignment (tuple unpacking):

    class Solution:
        def tribonacci(self, n: int) -> int:
            if n <= 2:
                return 0 if n == 0 else 1
            prev_prev = 0
            prev = 1
            curr = 1
            for _ in range(3, n + 1):
                prev_prev, prev, curr = prev, curr, prev_prev + prev + curr
            return curr

Dynamic programming template

The simple problem of calculating Fibonacci numbers does a great job of introducing the core components of dynamic programming problems, namely memoization for recursive top-down solutions and tabulation for iterative bottom-up solutions. We also looked at space optimization — this is more of a "garnish" than anything else, as it may not be possible to optimize for space in more complex problems. We did not look at one more possible "garnish" for dynamic programming problems: reconstructions. Sometimes it doesn't make sense to reconstruct anything (e.g., the problem we've been working on, calculating Fibonacci numbers), but sometimes it does; for example, maybe we don't want to know just the length of the longest increasing subsequence (LIS) but also the actual LIS itself. This usually requires us to store more information when executing an iterative bottom-up approach.

Everything described above leads us to a few standard steps for solving dynamic programming problems. The steps below may be thought of as a "template":

- Get a recursive solution
  - This is often the hardest part of dynamic programming problems because we're not usually given the recurrence relation. We have to come up with one that effectively models the problem at hand.
  - Problems like calculating Fibonacci numbers that give you the recurrence relation on a platter are much easier to approach.

- Parameter analysis
  - How many distinct parameter combinations are there for solving subproblems?
  - Are there few enough to store answers for each combination of parameters?

- Memoize
  - Allocate a table to hold stored answers.
  - Before running recursive code, check if you have computed the answer.

- Move to iterative version
  - For a given answer, what answers does it depend upon?
  - Figure out an order for the indices that fills in answers after the things they depend upon.

- Garnish
  - Can you reuse space? Optimize for space.
  - Do you need to store extra information for a constructive answer?

The rod cutting problem

Problem definition

Given: an $n$-inch steel rod and prices by integer length.

Find: Maximum revenue we can generate by cutting and selling it.

Example: How should we cut an 8 inch rod to maximize its sale price according to the following price table:

Rods-Я-Us Prices:

Dynamic programming template

Recall the following template for dynamic programming (DP) problems:

- Get a recursive solution
  - This is often the hardest part of dynamic programming problems because we're not usually given the recurrence relation. We have to come up with one that effectively models the problem at hand.
  - Problems like calculating Fibonacci numbers that give you the recurrence relation on a platter are much easier to approach.

- Parameter analysis
  - How many distinct parameter combinations are there for solving subproblems?
  - Are there few enough to store answers for each combination of parameters?

- Memoize
  - Allocate a table to hold stored answers.
  - Before running recursive code, check if you have computed the answer.

- Move to iterative version
  - For a given answer, what answers does it depend upon?
  - Figure out an order for the indices that fills in answers after the things they depend upon.

- Garnish
  - Can you reuse space? Optimize for space.
  - Do you need to store extra information for a constructive answer?

Previously, when calculating Fibonacci numbers, we were gifted the recurrence relation. But here we need to somehow think of a good way to recursively break down the problem. This is almost certainly the hardest part, especially if we don't have much experience in designing recursive programs. How do we start? To get started with designing a recursive DP algorithm, we need to think of how we can break the problem down into smaller, similar-looking problems.

Recursive solution design

How do we go about trying to design a recursive solution for this rod cutting problem? Well, first recall that all recurrences must have base cases. What do base cases represent? They represent the absolutely simplest scenarios we can dream up for whatever situation we're considering. They can almost be viewed as "atomic subproblems" because we can't split such subproblems into anything smaller or simpler (too bad nuclear fission kind of ruined the "can't split the atom" phrase).

Returning to our example, if we're trying to maximize the revenue we can generate from cutting up the rod and selling it, then what choices do we have from the outset? This is actually quite simple: sell it whole or cut it. Selling it whole is very simple, but if we should cut it, then where should we cut it? If we decide to cut the rod, then notice what happens: we end up with two smaller rods. The subsequent problems of cutting the smaller rods look exactly like the original problem of cutting the original rod. For smaller rods, we want to maximize how much we can get for each of them in order to maximize how much we can get for both of them combined. The fact that both of those subproblems look just like the original problem is key for DP.

A slightly different way to think about it is to pick a length of a rod to sell, cut a piece of that length to sell whole, and then recursively figure out how to cut and sell whatever's left after that. We essentially have the following approaches. If we cut into:

- two rods, then recursively solve each one.

- one piece to sell whole and a remainder, then recursively solve the remainder. For this second approach, we can come up with another variant:

  - the longest piece to sell and a remainder, then recursively solve the remainder. (This approach adds an extra parameter, the longest piece we can sell.)

Of the three possibilities above, we'll use the second approach. The last two approaches both have a clean visualization and a solution with one recursive part instead of two. But the second approach has simpler parameters. For the next DP problem we consider, the subset sum problem, we'll consider several different approaches.

Since we've decided on the second approach, let's start thinking about how that approach will work. If we are going to have one piece to sell whole, then what length should that piece be? Remember, for this second approach, our goal is to recursively solve the "remainder" left behind after cutting off the first piece. So we have to start with something for the first piece. Suppose we're inspired to start out by first cutting off 2 inches from the 8 inch rod.

So we get whatever the price was for 2 inches and then recursively figure out how much we can get for the remaining $n - 2$ inches:

Optimal(n) = Price[2] + Optimal(n - 2)

We don't try to algorithmically solve the smaller problem, Optimal(n - 2). Once we get something smaller than the original problem, Optimal(n), we just assume that recursion magically solves the remaining instance for us, as long as we have a base case (which we'll come back to). Now suppose we feel like we're going in the wrong direction with a 2 inch cut and decide to go with a 4 inch cut:

Optimal(n) = Price[4] + Optimal(n - 4)

Oops. Maybe we realize we want to try starting by cutting off 7 inches:

Optimal(n) = Price[7] + Optimal(n - 7)

The point is that any of our starting decisions may result in a "bad" decision we can't recover from since local decisions (i.e., what length to cut now) affect global decisions (i.e., what lengths we can possibly cut in the future). Hence, instead of cutting off 2, 4, or even 7 inches, let's cut off i inches, which leaves us with a length i rod to sell and a remaining rod of length n - i to recursively solve:

Optimal(n) = Price[i] + Optimal(n - i)

So how do we figure out what value of i we need to use? We showed above that any one of them we start with could go wrong. So let's just try them all. For every value of i, from 1 up through n, figure out how much we can make by selling a rod of length i, and then recursively cutting up whatever's left over.

Recursive solution

We don't try to get smart with our first cut. We just choose to consider every possibility. Even more importantly, once we recursively have a smaller problem, let's just assume that it's solved for us. Don't try to do anything with it except think of the base case. The simplest base case here is just a rod of length 0 (no one is going to pay for that; so the price for a length 0 rod ... is 0).

We immediately encounter our base case if we decide to sell the whole rod of length n at the beginning, leaving us with 0. More importantly though, we can see how this base case applies for future subproblems. That is, maybe we've worked our way down to having a rod of length n - k. If we sell the entire rod of length n - k, then, of course, we are left with 0.

Don't try to do too much when we start. Just get a working answer first. This is the hardest step in coming up with our own DP solutions. Do the first part simply and don't worry about efficiency yet. That comes later. That said, let's write down our recursive program:

    RodCutting(length, Price[])
        if (length == 0)
            return 0
        max = -inf
        for (i = 1; i <= length; i++)
            tmp = Price[i] + RodCutting(length - i, Price)
            if (tmp > max)
                max = tmp
        return max

The following is a working implementation of the pseudocode above in Python:
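A minimal Python sketch of the recursive pseudocode follows. The price table `PRICE` is hypothetical: only the prices for lengths 1 and 2 (2 and 5) are taken from the examples in this post, and the remaining entries are made up for illustration.

```python
import math

# Hypothetical price table indexed by length (index 0 is unused padding).
# Only PRICE[1] = 2 and PRICE[2] = 5 come from the post; the rest is assumed.
PRICE = [0, 2, 5, 9, 10, 13, 17, 20, 22]


def rod_cutting(length: int, price: list) -> int:
    """Best revenue for a rod of the given length, trying every first cut."""
    if length == 0:
        return 0  # base case: nothing left to sell
    best = -math.inf
    for i in range(1, length + 1):
        # Sell a piece of length i whole, then recursively solve the remainder.
        tmp = price[i] + rod_cutting(length - i, price)
        if tmp > best:
            best = tmp
    return best


print(rod_cutting(5, PRICE))
print(rod_cutting(8, PRICE))
```

With this assumed table, the best revenue is 14 for a rod of length 5 and 23 for a rod of length 8; a different price table would of course give different answers.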


Recursive tree

Let's see what the recursion tree looks like for our pseudocode above if we run it on a small sample problem of length 5:

Each node tells us the length of the rod, and it stores the best answer it has seen from all the possibilities it's tried so far. When it finishes, the final value is placed on the edge leading from that recursive call.

For example, this is how the recursion starts:

Once we hit the base case of 0, we backtrack to 1, which, if sold, yields a price of 2; hence, the node has the label 1:2 to indicate a length of 1 and the best answer it's seen so far from all the possibilities is 2, whose final value is placed on the edge leading from that recursive call. Above, the node labeled 1:2 has edge label 2 leading back up to the node whose label is 2 since it has not been fully explored yet.

We update the node currently labeled 2 to 2:4 since that is the best value currently seen (a rod of length 2 cut into two rods of length 1 will yield prices of 2 + 2 = 4):

But, if we instead elect to not cut the rod of length 2 into a rod of length 1, then we will hit the base case of length 0:

Since selling a rod of length 2 by itself has a price of 5, which is greater than selling two rods of length 1 with price 2 + 2 = 4, we update the node label from 2:4 to 2:5, and then we use this value of 5 to label the edge leading back from this node to the node currently labeled 3 that is higher in the tree:

The labelling scheme is the same for the rest of the recursion tree.

We can see from the full recursion tree above that even for a rod length as small as 5, we solve the subproblem for a rod of length 2 four separate times:

We hit the 0 leaf sixteen times, once for each possible way of cutting the rod. At each of the one-inch marks, we can make a cut or not. So for a length n rod, there are 2^(n - 1) possibilities. For example, with n = 5, we can cut at the following one-inch marks: 1, 2, 3, 4. A cut anywhere else is not possible (we only permit integer cuts and "cutting" at 0 or 5 is not cutting at all but keeping the whole rod). Hence, for an n-inch rod, we can choose to cut or not (two choices) at the following inch-marks: 1, 2, ... , n - 2, n - 1. Since we have two choices for each inch mark, this means we will have 2 x 2 ... x 2 x 2 (n - 1 times) possibilities (i.e., 2^(n - 1)). In the context of the recursion tree, a "possibility" concludes once there are no longer any cuts to make — this happens once we hit a leaf node of 0, which indicates the base case. Hence, there are 2^(n - 1) leaf nodes in our recursive tree, which correspond to recursive calls, making the runtime of our program exponential.
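These counts can be checked with a small sketch that mirrors the shape of the recursion but ignores prices entirely. The function name `calls_by_length` is hypothetical and exists only for this illustration.

```python
from collections import Counter


def calls_by_length(n: int) -> Counter:
    """Count how often the naive recursion is called for each rod length."""
    counts = Counter()

    def visit(length: int) -> None:
        counts[length] += 1
        # Same loop structure as RodCutting: one recursive call per first cut.
        for i in range(1, length + 1):
            visit(length - i)

    visit(n)
    return counts


counts = calls_by_length(5)
print(counts[0])  # times the base case (length 0) is reached
print(counts[2])  # times the length-2 subproblem is solved
```

For a rod of length 5 this reports 16 base-case hits and 4 solves of the length-2 subproblem, matching the counts read off the recursion tree.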

While the recursive tree for a rod of length 5 isn't too big, it already starts to look a bit crazy for a rod of length 7:

Parameter analysis

When we think about what the recursive calls look like, there's only one changing parameter: how much of the rod we have left to sell. This is always an integer from 0 up through whatever we started with. So it can't have that many values, just a linear number of them. Essentially, we have the following for our parameter analysis:

- Price[] never changes

- 0 <= length <= n for initial value of n (rod length)

This tells us we can make a table to store the best price for every possible length. This means we can move to a memoized version of our algorithm.

Memoized top-down DP approach

First recall our non-memoized approach:

    RodCutting(length, Price[])
        if (length == 0)
            return 0
        max = -inf
        for (i = 1; i <= length; i++)
            tmp = Price[i] + RodCutting(length - i, Price)
            if (tmp > max)
                max = tmp
        return max

Our memoized approach looks very similar, the main adjustments being the introduction of our table and the subsequent referencing and updating (all changes highlighted):

    RC(length, Price[])
        allocate Table[0...length] = -inf everywhere
        Table[0] = 0
        RodCutting(length, Price, Table)
        return Table[length]

    RodCutting(length, Price[], Table[])
        if (Table[length] != -inf)
            return Table[length]
        max = -inf
        for (i = 1; i <= length; i++)
            tmp = Price[i] + RodCutting(length - i, Price, Table)
            if (tmp > max)
                max = tmp
        Table[length] = max
        return max

- Lines 1-5: Set up the table, including pre-filling the base case.

- Lines 8-9: Before making any recursive calls, just look at the table to see if we've already solved the best price for the length of rod we're currently considering. If so, don't recompute the answer; just return it. If not, then recursively figure out the answer.

- Line 15: Store the recursive answer just figured out above before returning it.

We can actually clean up the code for our main procedure to be a bit more streamlined now that we're using a table to track maximum revenue values:

    RC(length, Price[])
        allocate Table[0...length] = -inf everywhere
        Table[0] = 0
        RodCutting(length, Price, Table)
        return Table[length]

    RodCutting(length, Price[], Table[])
        if (Table[length] == -inf)
            for (i = 1; i <= length; i++)
                tmp = Price[i] + RodCutting(length - i, Price, Table)
                if (tmp > Table[length])
                    Table[length] = tmp
        return Table[length]

If we implement the cleaned-up code in Python, then we can confirm the output values for all rod-cutting examples in this post (the length of 8 will be considered when we look at a bottom-up iterative approach):
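One possible Python rendering of the cleaned-up memoized pseudocode is sketched here. The price table `PRICE` is hypothetical: only the prices for lengths 1 and 2 come from this post, and the other entries are assumed for illustration. The names `rc` and `_rod_cutting` simply mirror RC and RodCutting.

```python
import math

# Hypothetical price table indexed by length (index 0 is unused padding).
# Only PRICE[1] = 2 and PRICE[2] = 5 come from the post; the rest is assumed.
PRICE = [0, 2, 5, 9, 10, 13, 17, 20, 22]


def rc(length: int, price: list) -> int:
    """Wrapper: allocate the table, pre-fill the base case, run the recursion."""
    table = [-math.inf] * (length + 1)
    table[0] = 0
    _rod_cutting(length, price, table)
    return table[length]


def _rod_cutting(length: int, price: list, table: list) -> int:
    """Memoized recursion: only compute an entry that is still -inf."""
    if table[length] == -math.inf:
        for i in range(1, length + 1):
            # The table is passed along so smaller answers are reused.
            tmp = price[i] + _rod_cutting(length - i, price, table)
            if tmp > table[length]:
                table[length] = tmp
    return table[length]


print(rc(5, PRICE))
print(rc(8, PRICE))
```

Updating `table[length]` inside the loop is safe because every recursive call is for a strictly smaller length, so a partially computed entry is never read by another call.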


Memoized recursive tree

If we now look at the same recursion tree for length 5, then just like with our first Fibonacci version, every node that isn't on the left spine of the tree has its children pruned because all of the values get computed on that spine:

We store a linear number of answers and our computation goes much more quickly than before.

Now we have a decent memoized version of our algorithm. Thinking about our table, in what order does it get filled? In order to fill in any box, we will directly depend on all of the boxes to its left because it directly makes recursive calls to all of them (line 9 in our cleaned up pseudocode). So there's only one way to fill in the table: left to right.

Furthermore, we can already see that we won't be able to optimize for space in the same way that we did in the Fibonacci problem. In the Fibonacci problem, computing a Fibonacci number meant we needed only the previous two Fibonacci numbers. But in this case, as remarked on above, we need all values to the left of the current length value, meaning we can't afford to get rid of any of our table.

Tabulated bottom-up DP approach

As noted above, we're filling in our table directly from left to right, which suggests we might be able to solve this problem iteratively. Let's see what that approach might look like:

RodCutting(n, Price[])
    allocate Table[0...n]
    Table[0...n] = 0
    for (length = 1; length <= n; length++)
        for (i = 1; i <= length; i++)
            tmp = Price[i] + Table[length - i]
            if (tmp > Table[length])
                Table[length] = tmp
    return Table[n]

The pseudocode above translates almost line for line into Python.
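One possible Python rendering of the bottom-up pseudocode is sketched below. As before, the price list is an assumption (lengths 1 through 3 are taken from the table printed later in this post, lengths 4 through 8 are placeholders), and the name `rod_cutting_bottom_up` is illustrative.

```python
# Assumed price list (index = piece length, index 0 unused).
# Lengths 4-8 are placeholder values picked for illustration.
PRICES = [0, 2, 5, 9, 10, 12, 15, 17, 20]


def rod_cutting_bottom_up(n, price):
    """Fill the revenue table left to right and return the best revenue for length n."""
    table = [0] * (n + 1)                     # table[0] = 0 is the base case
    for length in range(1, n + 1):            # solve smaller rods first
        for i in range(1, length + 1):        # every possible first cut
            tmp = price[i] + table[length - i]
            if tmp > table[length]:
                table[length] = tmp
    return table[n]


print(rod_cutting_bottom_up(8, PRICES))       # prints 23
```

No recursion is needed because every entry `table[length - i]` read by the inner loop was already filled in by an earlier iteration of the outer loop.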

We can think about how long the code above takes to run. To fill in the ith position, it takes time i because we consider the maximum over i possible first cuts. And we're solving for problems i = 1 up to i = n, the length of the full-size rod. That's $O(n^2)$ time, which is much better than the exponential time we started with:

- Runtime: $O(n^2)$ (iterative or memoized)

- Space: $O(n)$ (iterative or memoized)
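As a quick check on the quadratic bound, and assuming each candidate first cut takes constant time to evaluate, the total work is the sum of the per-entry costs:

```latex
\sum_{i=1}^{n} i \;=\; \frac{n(n+1)}{2} \;=\; \Theta(n^2)
```

For example, a rod of length 8 needs 1 + 2 + ... + 8 = 36 evaluations of the inner loop body.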

Moving to the last step, unlike the Fibonacci sequence, as remarked on above, there's not really any way to optimize for space here.

Reconstructing the optimal cuts

But for this problem we do get to see the last possible "garnish" stage of dynamic programming which was previously missing from the Fibonacci problem: solution reconstruction. That is, for this rod cutting problem specifically, we're generally not just looking for the price we can get but also the cuts we need to get that price. It would be pretty frustrating if we knew how much we could get but not how to get it.

So we'll store some extra information to reconstruct the cuts we need. For this specific problem, we'll make a second table. The change to our previous working code is fairly minimal:

RodCutting(n, Price[])
    allocate Table[0...n], Cuts[0...n]
    Table[0...n] = 0
    for (length = 1; length <= n; length++)
        for (i = 1; i <= length; i++)
            tmp = Price[i] + Table[length - i]
            if (tmp > Table[length])
                Table[length] = tmp
                Cuts[length] = i

    OptimalCuts = List[]
    while (n > 0)
        OptimalCuts.append(Cuts[n])
        n -= Cuts[n]
    return OptimalCuts

When we optimized over different possible first cuts, we stored the maximum amount we could get (line 8 above), but now we also want to store which cut we used to get that maximum amount (line 9) — this value will get updated whenever the optimal price gets updated. When we're done, this second table will let us reconstruct the cuts we need to make.

Specifically, in lines 11-15 of the pseudocode above, the first time we access Cuts[n] will tell us what the length of our very first cut should be. Naturally, we subtract this amount away from n to get the length of however much rod we have remaining: n - Cuts[n]. We can assign this value to n (i.e., n -= Cuts[n]), and we can keep removing cuts until there's no more rod left to remove (i.e., while (n > 0)). Each time a portion of rod is cut, we append to OptimalCuts however much rod was just removed. This tells us the sequence of rod cuts we need to make. Of course, the order in which we make the cuts ultimately does not matter, but we need to know the frequency of whatever cuts we make (using a set for OptimalCuts instead of a list would remove this important information).

This is a bit easier to understand if we play around with a Python implementation of the pseudocode above.
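A minimal sketch of such an implementation is given below. The variable names `table`, `cuts`, and `optimal_cuts` follow the names used in this post; the function name `rod_cutting_with_cuts` and the price list are assumptions (prices for lengths 4 through 8 are placeholders chosen for illustration).

```python
# Assumed price list (index = piece length, index 0 unused).
# Lengths 4-8 are placeholder values picked for illustration.
PRICES = [0, 2, 5, 9, 10, 12, 15, 17, 20]


def rod_cutting_with_cuts(n, price):
    """Return the list of piece lengths that maximizes revenue for a rod of length n."""
    table = [0] * (n + 1)   # table[L]: best revenue for a rod of length L
    cuts = [0] * (n + 1)    # cuts[L]: first cut that achieves table[L]
    for length in range(1, n + 1):
        for i in range(1, length + 1):
            tmp = price[i] + table[length - i]
            if tmp > table[length]:
                table[length] = tmp
                cuts[length] = i      # remember which first cut gave the new best

    # Walk the cuts table backwards; assumes positive prices, so cuts[n] >= 1.
    optimal_cuts = []
    while n > 0:
        optimal_cuts.append(cuts[n])
        n -= cuts[n]
    return optimal_cuts


print(rod_cutting_with_cuts(8, PRICES))   # prints [2, 3, 3]
```

The result is kept as a list rather than a set so that the repeated cut of length 3 is not lost.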

If we insert the following print statements before the declaration of optimal_cuts, then we can see our table as well as the list of first cuts we should take for each length:

print(table)

print(cuts)

We'll see the following (formatted manually for clarity):

# L =  0  1  2  3   4   5   6   7   8    rod length L
      [0, 2, 5, 9, 11, 14, 18, 20, 23]  # maximum revenue that can be generated by different cuts
      [0, 1, 2, 3,  1,  2,  3,  1,  2]  # first cut for rod of length L

The table above lets us reconstruct the optimal cuts needed for each example length we've considered in this post: 8, 7, and 5.

- Rod length 8:

  - First cut: 2. This leaves us with 8 - 2 = 6 inches of rod.
  - Second cut ("first cut" for a rod of length 6): 3. This leaves us with 6 - 3 = 3 inches of rod.
  - Third cut ("first cut" for a rod of length 3): 3. This leaves us with 3 - 3 = 0 inches of rod.
  - We have no more rod to sell (base case) and thus conclude selling.

- Rod length 7:

  - First cut: 1. This leaves us with 7 - 1 = 6 inches of rod.
  - Second cut ("first cut" for a rod of length 6): 3. This leaves us with 6 - 3 = 3 inches of rod.
  - Third cut ("first cut" for a rod of length 3): 3. This leaves us with 3 - 3 = 0 inches of rod.
  - We have no more rod to sell (base case) and thus conclude selling.

- Rod length 5:

  - First cut: 2. This leaves us with 5 - 2 = 3 inches of rod.
  - Second cut ("first cut" for a rod of length 3): 3. This leaves us with 3 - 3 = 0 inches of rod.
  - We have no more rod to sell (base case) and thus conclude selling.

In summary,

- $O(n^2)$ runtime

- $O(n)$ space

- Reconstruct cuts in time linear in the number of cuts in the optimal set

The subset sum problem

Problem definition

The subset sum problem (SSP) is a very well-known problem that may be stated as follows. We're going to look at one of the simplest versions here, which may be considered to be a special case of the knapsack problem:

- Given: A set $S$ of positive integers and a positive integer $K$

- Answer: True if and only if there exists a subset of $S$ that sums to $K$

- Example:

  - For : true; among several possibilities:
  - For : false (just because)

Note that the "set" is allowed to have repeated values. In much of the work we do below, we'll index items in $S$ from $1$ to $n$ in order to provide cleaner notation, but nothing changes conceptually (it's a little bit more nuanced than this, but we'll get into the details when we take a look at memoizing the second recursive algorithm).

Dynamic programming outline

Let's recall our DP template:

- Get a recursive solution

  - This is often the hardest part of dynamic programming problems because we're not usually given the recurrence relation. We have to come up with one that effectively models the problem at hand.
  - Problems like calculating Fibonacci numbers that give you the recurrence relation on a platter are much easier to approach.

- Parameter analysis

  - How many distinct parameter combinations are there for solving subproblems?
  - Are there few enough to store answers for each combination of parameters?

- Memoize

  - Allocate a table to hold stored answers.
  - Before running recursive code, check if you have computed the answer.

- Move to iterative version

  - For a given answer, what answers does it depend upon?
  - Figure out order for indices to fill in answers after things they depend upon.

- Garnish

  - Can you reuse space? Optimize for space.
  - Do you need to store extra information for a constructive answer?

We're going to let the outline above guide our work. To start, we want to obtain a recursive solution. What would a recursive solution look like here? We're looking for a yes/no answer (i.e., true if the subset sum exists or false if it does not). But if the answer is yes, then we may also want to know which subset(s) may be used to reach the target sum $K$. If the answer is no, then there's not much else to know about it unless we want to know something close such as "the largest subset sum no bigger than $K$".

Recursive idea 1

Let's assume for a moment that the answer is yes. Recursively, we might think, "If we knew one integer that went into the sum, then we could recursively figure out how to build an answer with the remaining items to make whatever's left over from the target sum." If we want to think of it in a way somewhat similar to the rod cutting problem, then we can imagine trying out a value at first just because we felt like it might be promising to do so. For example, if is in the subset, then solve ; that is, we reduce the problem to trying to find a subset from that sums to . Similarly, we could be inspired to consider instead of . The process is the same: if is in the subset, then solve .

In general, if we want to include the $i$th integer in $S$ in our potential subset sum, then the recursive question becomes, "Using the set of integers minus the $i$th integer, can we find a subset that equals our sum minus the size of the $i$th integer?" That is, if $S[i]$ is in the subset, then solve $\text{SubsetSum}(S - \{S[i]\}, K - S[i])$. We need to try all possible $i$.

Recursive algorithm

We can write a recursive program to answer the question in the way posed above, trying all possible values of $i$. If no value will do, then it isn't possible:

SubsetSum(S[1...n], K)
    if (K == 0)
        return true
    if (K < 0 or |S| == 0)
        return false
    for x in S
        S' = S - {x}    (build a new set)
        if (SubsetSum(S', K - x))
            return true
    return false

What are the base cases above? There are two:

- Lines 2-3: If K == 0, then it's always possible to achieve this by using an empty subset.

- Lines 4-5: There are two parts to this base case:

  - If K < 0, then no matter what set S we use, we cannot find a subset sum that's negative because S consists of only positive integers and the empty subset sums to 0.
  - If |S| == 0, then our set is empty, and it's not possible to have a positive sum with zero elements.

We can implement the pseudocode above in Python to try out the example $S$ and $K$-values mentioned above.
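A minimal Python sketch of the first recursive idea is given below. The example set `[2, 5, 8, 11]` and the targets are hypothetical values chosen only for illustration; they are not the example from this post.

```python
def subset_sum(S, K):
    """Recursive idea 1: guess any item x, remove it, and recurse on what is left."""
    if K == 0:
        return True                       # the empty subset sums to 0
    if K < 0 or len(S) == 0:
        return False                      # overshot, or nothing left to use
    for idx, x in enumerate(S):
        rest = S[:idx] + S[idx + 1:]      # S' = S - {x} (builds a new list)
        if subset_sum(rest, K - x):
            return True
    return False


# Hypothetical example values (an assumption, not taken from the post).
S = [2, 5, 8, 11]
print(subset_sum(S, 13))   # prints True  (for instance 5 + 8)
print(subset_sum(S, 4))    # prints False
```

Removing the item by position rather than by value keeps the sketch correct when the "set" contains repeated values.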

It looks like we've obtained a recursive solution. But is it efficient? A bit of reflection will show it is not.

Parameter problems

The second step of the DP template is to consider parameter analysis. If we take an arbitrary item x out of the set S, thus creating a new set S' = S - {x} for our recursive call, then note that there are a lot of possibilities for that remaining set S'. Starting with the original set S, we try to take each item out individually. From each of those sets, we again try to take out each remaining item individually. And then from each of those sets, we again try to take each remaining item out individually. Right away we see that we are going to have a lot of recursive calls.

And not just a lot of recursive calls in total but a lot of repeated calls among them. For example, suppose we take out the elements x and y from the set S in either order. Then both of those would give us the same recursive call:

$$\text{SubsetSum}(S - \{x, y\},\; K - x - y)$$

If we try putting half of the items into the subset, then there are $(n/2)!$ ways we can do that with the same items. That's a ton of times to repeat one recursive call.

That repeated work is a hallmark of DP and leads to the next important question: how many different, distinct ways do we call the problem? Even if we exclude repeat calls, it seems like we could get lots of distinct subsets of our original set. In the worst case, maybe we end up considering almost every possible subset of our set $S$. Hence, for an $n$-element set, that's $2^n$ subsets. That's bad. For decently sized $n$, we don't have time or even space to store all of the problems we want to look at. For DP, we need to have a reasonably small number of distinct calls. But we can't guarantee that here. We're probably hosed once we try to allocate a table to hold our solutions. So what do we do? We go back to our recursive solution and see if there's a way we can improve upon it.

To summarize the issues we've identified so far with our first recursive idea:

- There can be many repeated calls to the same set: $(n/2)!$

- There can also be a lot of calls to distinct sets: $2^n$

We've got to improve on this if we want to handle anything bigger than the smallest of $n$-values.
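To make the blow-up concrete, the sketch below instruments the first recursive idea and counts both the total number of calls and the number of distinct remaining sets it is called on. The helper name `count_calls` and the input are assumptions for illustration; the target is deliberately larger than the sum of all items so that no call can return early.

```python
def count_calls(S, K):
    """Run recursive idea 1, tracking total calls and distinct remaining index sets."""
    total_calls = 0
    distinct_sets = set()

    def solve(remaining, k):
        nonlocal total_calls
        total_calls += 1
        distinct_sets.add(remaining)          # remaining is a frozenset of indices
        if k == 0:
            return True
        if k < 0 or not remaining:
            return False
        for idx in remaining:
            if solve(remaining - {idx}, k - S[idx]):
                return True
        return False

    solve(frozenset(range(len(S))), K)
    return total_calls, len(distinct_sets)


# Hypothetical worst-case style input: 8 items whose total (36) is below the target.
total, distinct = count_calls(list(range(1, 9)), 100)
print(total, distinct)    # prints 109601 256
```

Even with only 8 items, all $2^8 = 256$ subsets show up as distinct subproblems, and the total number of calls is far larger because the same set is reached through many different removal orders.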

Recursive idea 2

As we've seen previously with DP problems like Fibonacci and rod cutting:

- We first come up with a recursive solution. (Step 1 of the DP template)

- Then we analyze our parameters and identify whatever repeated work we might be doing. (Step 2)

- The next step would then be to try to memoize our recursive algorithm in order to save ourselves from repeating a bunch of work. (Step 3)

But the second bullet point in the section above highlights why continuing on to step 3 is not prudent here: we have a prohibitively large number of possibly distinct recursive calls to make (exponential), rendering useless what would normally be a game changer (i.e., memoization).

Hence, we need to ask ourselves a question here: Is there a way to recursively solve the problem with fewer distinct subproblems? We've actually seen a hint. A lot of our "repeat" sets came from using the same possible sets of integers that we got to by considering integers in different orders. But we can impose our own order to remove things — we don't need dynamic programming to avoid those repeats. It's kind of a waste. Instead of guessing an index to use, we can guess the lowest or highest index to use. That will lower the number of repeated problems tremendously. So we should see what it does to the number of distinct problems too.

Should we use the lowest or the highest index? They're conceptually the same, and both choices work fine, but we're going to go with the highest index. Why? Because the notation works out more cleanly: for a set with x items, S[1, ..., x] looks cleaner than S[n - x + 1, ... , n]. So let's work from the back of the array instead of from the front of the array.

Now let's assume that we know the highest indexed integer to use. For example, if that index is $33$, then we are going to solve $\text{SubsetSum}(S[1 \ldots 32], K - S[33])$; that is, we're left using integers with indices $1$ through $32$ from the set $S$ to build $K - S[33]$, the target sum after we've subtracted the thirty-third integer. Hence, in general, if $i$ is the highest index used, then we are going to solve $\text{SubsetSum}(S[1 \ldots i - 1], K - S[i])$. With this new scheme, we will still try all possible $i$, but we'll cut down on a lot of the repeated work.

How much better is this than our first try?

Recursive algorithm

If we always just remove integers from the high indices of the array, and we start with $n$ items in the set $S$, then this approach only has $n + 1$ possible different sets it will ever be called on:

$$S[1 \ldots 0] = \emptyset,\quad S[1 \ldots 1],\quad S[1 \ldots 2],\quad \ldots,\quad S[1 \ldots n]$$

That's significantly better than the $2^n$ possible sets we had before. That lets us write the following recursive version more cleanly. We don't actually have to change the set like before; we just add an integer parameter to let us know how much of the set we can use.

SubsetSum(S[1...n], K)
    return SubsetSumRecursive(S, n, K)

SubsetSumRecursive(S[1...n], lastIndex, k)
    if (k == 0)
        return true
    if (k < 0 or lastIndex == 0)
        return false
    for (i = 1; i <= lastIndex; i++)
        if (SubsetSumRecursive(S, i - 1, k - S[i]))
            return true
    return false

We can implement the pseudocode above in Python as well.
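A minimal sketch of such an implementation follows. It keeps the pseudocode's convention that `last_index` counts items from 1 through n, so item i lives at `S[i - 1]` in a Python list. The function names match the ones used elsewhere in this post; the example set is a hypothetical one chosen only for illustration.

```python
def subset_sum(S, K):
    return ssp_recursive_idea_2(S, len(S), K)

def ssp_recursive_idea_2(S, last_index, k):
    if k == 0:
        return True
    if k < 0 or last_index == 0:          # overshot, or no items left to use
        return False
    for i in range(1, last_index + 1):    # i is the highest (1-based) index used
        # Item i sits at S[i - 1]; afterwards only items 1..i-1 remain available.
        if ssp_recursive_idea_2(S, i - 1, k - S[i - 1]):
            return True
    return False


# Hypothetical example values (an assumption, not taken from the post).
S = [2, 5, 8, 11]
print(subset_sum(S, 13))   # prints True
print(subset_sum(S, 4))    # prints False
```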

The Python implementation above closely mirrors the pseudocode. Specifically, we treat last_index as if the items in S are indexed from 1 through n == len(S), inclusive. This is reflected in the second base case on line 7 where we indicate we should return false if last_index == 0. In this context, if last_index == 0, then that means items 1, ... , n in S are no longer available, meaning it's not possible to sum to any positive value K.

If, however, we treat last_index as if the items in S are indexed from 0 through n - 1, then a few small adjustments are needed to our implementation code:

def subset_sum(S, K):
    return ssp_recursive_idea_2(S, len(S) - 1, K)

def ssp_recursive_idea_2(S, last_index, k):
    if k == 0:
        return True
    if k < 0 or last_index == -1:
        return False
    for i in range(last_index + 1):
        if ssp_recursive_idea_2(S, i - 1, k - S[i]):
            return True
    return False

The changes above work. There's nothing wrong with doing what we did above. In fact, the changes above probably seem more natural. At least they do until we start trying to make things more efficient by memoizing our approach with a lookup table. We'll see why the changes above are not preferred soon.

Our new recursive approach looks much better than our first attempt. The parameters are clean enough now that we can animate the recursive calls. For a small six-element set $S$, let's start with an example where there's no solution, specifically for sum $K = 46$:

For the top-numbered node (6 on top and 46 beneath), the 6 means that we can use any of the first six integers from our set (i.e., the entire set), and 46 is the target sum. Even with the small set S above, we can see that the tree already starts to look a bit big. On the other hand, the code is written so that once we see the answer is true, it shortcuts back to the root. So for an instance where the answer is true, the tree is much smaller:

Memoized version

When we do our parameter analysis in service of trying to come up with a memoized version, things look much better than before. The set S never changes between calls, so only two parameters vary: the target sum k, which can go up to K, and lastIndex, which specifies how long a prefix of S we may use and can go up to the length of the array. That gives at most (n + 1)(K + 1) distinct subproblems, which is few enough to store in a table.

Now that our parameters are more under control, we can memoize our recursive program:

SubsetSum(S[1...n], K)
    allocate Ans[1...n][1...K] = NIL everywhere
    return SubsetSumRecursive(S, n, K, Ans)

SubsetSumRecursive(S[1...n], lastIndex, k, Ans[1...n][1...K])
    if (k == 0)
        return true
    if (k < 0 or lastIndex == 0)
        return false
    if (Ans[lastIndex][k] != NIL)
        return Ans[lastIndex][k]
    for (i = 1; i <= lastIndex; i++)
        if (SubsetSumRecursive(S, i - 1, k - S[i], Ans))
            return Ans[lastIndex][k] = true
    return Ans[lastIndex][k] = false

Changes from our original recursive program are highlighted above. The clear change to the code comes with the incorporation of the Ans table. So it's worth pondering why that table is the way it is, especially if we ever want to have any hope of coming up with this kind of solution for ourselves one day. What is the Ans table exactly? What does it tell us? The allocation Ans[1...n][1...K] = NIL everywhere indicates the Ans table has n rows and K columns, where all cells are initialized to a null value, NIL. But what does this mean? What are the different entries supposed to tell us?

For example, suppose we look at cell Ans[p][q]. Notationally, what does Ans[p][q] even mean? What is this entry supposed to tell us? It's supposed to tell us whether or not it's possible to use elements [1, ... , p] of S (i.e., the first p elements) to get a sum of q. Hence, Ans[p][q] should only hold one of three possible values:

- NIL: We don't yet know if it's possible to obtain the sum q from the first p elements of S (line 2 of the pseudocode).

- true: It is possible to obtain the sum q from the first p elements of S (line 14 of the pseudocode).

- false: It is not possible to obtain the sum q from the first p elements of S (line 15 of the pseudocode).

We could handle our base cases just as before, but we can make use of a clever observation often used in DP implementations to streamline things: it's common to include an extra row(s) and/or column(s) in the memoization table to handle base cases. What would this possibly look like for this particular problem? Our base cases involve sums of 0 (always possible to achieve with an empty subset) or subsets of size 0 (never possible to obtain a positive subset sum of K > 0 from an empty subset).

Right now our memoization table, Ans, has |S| rows and K columns:

        1    2    3    4   ...   K
  1     ?    ?    ?    ?   ...   ?
  2     ?    ?    ?    ?   ...   ?
  .
  .
  .
  n     ?    ?    ?    ?   ...   ?

But remember: the entry Ans[p][q] is supposed to tell us whether or not it's possible to use the first p elements of S to come up with a subset sum of q. What if we added a row and column to our memoization table for the base cases? That is, we can add a row at the beginning to indicate subsets of size 0 (i.e., zero elements of S can still be used), and we can similarly add a column at the beginning to indicate a sum of 0:

        0    1    2    3    4   ...   K
  0     ✓    x    x    x    x   ...   x
  1     ✓    ?    ?    ?    ?   ...   ?
  2     ✓    ?    ?    ?    ?   ...   ?
  .
  .
  .
  n     ✓    ?    ?    ?    ?   ...   ?

Note how the configuration above effectively handles all base cases: it will always be possible to achieve a subset sum of 0 no matter what our subset looks like (thus column 0 is filled with true values, indicated by ✓ marks above), and we will never be able to have a subset sum of K > 0 when our subset is empty (thus all of row 0 is filled with false values, indicated by x marks above, except column 0 in this row because an empty subset does have a sum of 0).

If we want to incorporate the base cases into the memoization table, then it's important to note that our memoization table must have |S| + 1 rows and K + 1 columns no matter what indexing scheme we use for S. The rationale behind choosing 1-indexing for S is probably clearer now from a presentational standpoint — the indexing for the memoization table will now perfectly align with how S is indexed; for example, row x of Ans will correspond to item at index x in S.

If, instead, we used 0-indexing for S, then it would be a bit more presentationally awkward to recognize that row x of Ans would now correspond to item at index x - 1 in S. For example, row 1 of Ans would now correspond to item at index 1 - 1 = 0 in S. This is because we still want to use the first row of our memoization table for base cases. But now the presentation becomes a bit more sloppy.

Of course, if we actually implement our algorithm in code, then we really don't have a choice when it comes to indexing. For example, Python inherently uses 0-indexing. Thus, we just need to be a bit careful when taking care of the implementation details.

If we do as indicated above and "smuggle the base cases into our memoization table", then we have two allocations to make:

- Ans[0...n][0] should be marked as true everywhere; that is, all |S| + 1 subsets, including the empty set, can achieve a subset sum of 0

- Ans[0][1...K] should be marked as false everywhere; that is, achieving a subset sum of any positive value 1 through K, inclusive, is impossible for an empty subset
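The two allocations can be sketched in Python as shown below, with `None` standing in for NIL. The helper name `make_table` is hypothetical and only used for illustration.

```python
def make_table(n, K):
    """Build an (n + 1) x (K + 1) table with the base cases pre-filled."""
    # A list comprehension gives every row its own list.
    ans = [[None] * (K + 1) for _ in range(n + 1)]
    for p in range(n + 1):
        ans[p][0] = True          # column 0: a sum of 0 is always achievable
    for q in range(1, K + 1):
        ans[0][q] = False         # row 0: no positive sum from zero items
    return ans


for row in make_table(3, 4):
    print(row)
# prints [True, False, False, False, False]
# then   [True, None, None, None, None] three times
```

Writing `[[None] * (K + 1)] * (n + 1)` instead would make every row refer to the same list, so filling in one row would silently change all of them.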

With everything above in mind, we can write some new and improved pseudocode:

SubsetSum(S[1...n], K)
    allocate Ans[0...n][0...K] = NIL everywhere
    Ans[0...n][0] = true everywhere
    Ans[0][1...K] = false everywhere
    return SubsetSumRecursive(S, n, K, Ans)

SubsetSumRecursive(S[1...n], lastIndex, k, Ans[0...n][0...K])
    if (k < 0)
        return false
    if (Ans[lastIndex][k] != NIL)
        return Ans[lastIndex][k]
    for (i = 1; i <= lastIndex; i++)
        if (SubsetSumRecursive(S, i - 1, k - S[i], Ans))
            return Ans[lastIndex][k] = true
    return Ans[lastIndex][k] = false

The changes above, notably the incorporation of the base cases into our memoization table, will eventually help to make our iterative code look cleaner. The pseudocode above can also be implemented in Python.
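One way the memoized pseudocode with smuggled-in base cases might look in Python is sketched below. `None` plays the role of NIL, row x of `ans` corresponds to `S[x - 1]` because Python lists are 0-indexed, and the names `subset_sum_memo` and `_ssp_memo` as well as the example set are assumptions made for illustration.

```python
def subset_sum_memo(S, K):
    if K < 0:
        return False                          # guard: the table needs K >= 0
    n = len(S)
    # ans[p][q]: can the first p items of S sum to q? None means "not known yet".
    ans = [[None] * (K + 1) for _ in range(n + 1)]
    for p in range(n + 1):
        ans[p][0] = True                      # base case: sum 0
    for q in range(1, K + 1):
        ans[0][q] = False                     # base case: no items, positive sum
    return _ssp_memo(S, n, K, ans)


def _ssp_memo(S, last_index, k, ans):
    if k < 0:                                 # must come before the table lookup
        return False
    if ans[last_index][k] is not None:
        return ans[last_index][k]
    for i in range(1, last_index + 1):        # i is the highest (1-based) index used
        if _ssp_memo(S, i - 1, k - S[i - 1], ans):
            ans[last_index][k] = True
            return True
    ans[last_index][k] = False
    return False


# Hypothetical example values (an assumption, not taken from the post).
S = [2, 5, 8, 11]
print(subset_sum_memo(S, 13))   # prints True
print(subset_sum_memo(S, 4))    # prints False
```

The `k < 0` check has to run before the table lookup because a negative index in Python would quietly wrap around to the end of the row instead of failing.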

If we run our code on the same six-element set $S$ with $K = 46$, where we know the outcome is false, then our memoization table starts out with only the base cases filled in, and the final memoization table corresponds to the final recursion tree for our memoized approach.

If we look at the recursion tree for the memoized approach, then we will see that some parts of the tree get pruned off.

In our example above, the number of items is really small: $n = 6$. There would be a lot more drastic pruning if the set were large with multiple subsets that had equal sums.

Iterative version

If we want to come up with an iterative approach, then we need to analyze our memoization table. To fill an arbitrary box in the table, say row 5 column 40 (i.e., we can use the first five elements of S to try to get a subset sum of 40):

- we can make one recursive call to check a value on each of the rows above it (i.e., to determine whether or not the previous element in S can be used as part of the subset sum), where

- the column will be something to the left of the current column, depending on the size of the integer we're trying to incorporate into the subset sum (this is because each column corresponds to a subset sum, which can only decrease whenever an element of S is incorporated into the subset sum, and this reduced subset sum translates to a column "to the left" because that column represents a smaller subset sum).

We would have something like the following:

Each table location depends on locations above it (up to one per row) and with smaller column indices. Specifically, using the table above as an example, to compute whether the sum 40 can be achieved using the first 5 elements of S, we rely on the results computed for

- smaller subsets (i.e., subsets drawn from at most the first 5 - 1 = 4 elements of S, which correspond to the rows above row 5 in the table) and

- smaller sums (i.e., sums of the form 40 - S[p], where S[p] is the pth element of S and 1 <= p <= 5, which correspond to columns to the left of the current column, 40).

In general, "each table location depends on locations above it" means each cell ans[i][subset_sum] depends on ans[idx - 1][subset_sum - S[idx]], where idx stands for item idx of S (hence, 1 <= idx <= i):

The relationship above suggests a natural order for how we should plan to fill our table: as long as we fill the table using an order that fills everything above and to the left of a square before we get to it, then we will be fine. If we start filling the table from the top left, then we can fill the table in one of two ways to ensure the fill order referenced above is respected:

- Starting from the top, fill a row left to right. Then proceed to the next row. That is, we iterate row by row: for each element i in S, we iterate over all subset_sum possibilities from 1 through K.

- Starting from the left, fill a column top to bottom. Then proceed to the next column. That is, we iterate column by column: for each subset_sum possibility from 1 through K we iterate over each element i in S.

Both approaches above work, but it's arguably easier to follow the row by row approach because it allows us to clearly see the relationship between the current row and all rows above it. It also makes it easier for us to observe a sort of "trailing" effect: the inclusion of each new element S[i] influences the ability to form new sums based on previous sums achievable with fewer elements:

Table start state

As can be seen in the images above, rows trailing the current row will always have at least as many true values as the current row in the same cells because once a subset sum is achievable with x elements, the same subset sum must be achievable by considering the same x elements in addition to other elements: x + 1 elements, x + 2 elements, etc. In the images above, this behavior is illustrated by highlighting columns in red once the subset sum represented by that column index has been achieved — that column will always be red as we consider more and more elements from S.

Note that filling in rows in the table gets progressively slower: each row fill is slower than the one before it. Why? Because as we move to rows corresponding to higher indices (i increases), the computation for each cell becomes more complex because we're considering more elements (elements 1 through i of S).

At this point, we've got our base cases, we've decided we want to fill our table row by row, and we've determined that table cell ans[i][subset_sum] depends on ans[idx - 1][subset_sum - S[idx]] for 1 <= idx <= i for any given i, where 1 <= i <= |S|. But we still don't have any code! We need to consider what updating our table actually looks like from an implementation standpoint. Right now it's probably not too difficult to conceive of the following pseudocode:

SubsetSum(S[1...n], K)
    allocate Ans[0...n][0...K] = NIL everywhere
    Ans[0...n][0] = true everywhere
    Ans[0][1...K] = false everywhere
    for (i = 1; i <= n; i++)
        for (subset_sum = 1; subset_sum <= K; subset_sum++)
            ? ans[i][subset_sum] ... what should go here ?
    return Ans[n][K]

Let's address the lines in our pseudocode above:

- Line 2: Our ans table is allocated.

- Line 3: A subset sum of 0 is achievable for any subset (base cases).

- Line 4: A positive subset sum is never achievable when the subset is empty (base cases).

- Lines 5-6: For each element i in S, we iterate over all subset_sum possibilities 1 through K (i.e., we process our table row by row).

- Line 7: This is the main hang up. We need to figure out how to effectively update ans[i][subset_sum] here.

- Line 8: We return whether or not it's possible to achieve the subset sum K using all n values from S.

Our remaining work for the iterative implementation clearly revolves around how to effectively handle line 7 in the pseudocode above. At that point in the implementation, we need to specify a logically sound way of updating the entry for ans[i][subset_sum] in the table; that is, should the entry ans[i][subset_sum] in the table be set to true or to false? Of course, it depends:

- false: For whatever i value we're on (i.e., up to the first i values of S), if it's not possible to achieve subset_sum with the first i items of S, then ans[i][subset_sum] should be set to false. But we still need to consider the first i items of S in order to make this determination; that is, for each i value of the outer for loop (line 5), we need to consider the first, second, third, ... , (i - 1)st, and ith items of S. Let curr_idx indicate the index of the value in S we're currently considering, where 1 <= curr_idx <= i. When should item curr_idx in S be included as part of the subset sum? It may be easier to specify when item curr_idx of S can't be part of the subset sum:

  - curr_idx > i: This is mostly due to the mechanics of how we've set up our process; that is, we're only considering the first i elements of S once we hit line 7. We don't want to access elements beyond that at this point in the process; thus, the condition 1 <= curr_idx <= i needs to be respected.

  - S[curr_idx] > subset_sum: If the current element is larger than the target subset sum, then we certainly cannot include it to form the subset sum (because it would exceed the sum we are trying to achieve). Hence, we would need to skip this element and consider the next element, curr_idx = curr_idx + 1, so long as the condition curr_idx <= i is respected for the new curr_idx value.

  - ans[curr_idx - 1][subset_sum - S[curr_idx]] == false: This is perhaps a bit harder to see at first, but it's absolutely critical and goes back to our earlier observation that each cell ans[i][subset_sum] depends on ans[idx - 1][subset_sum - S[idx]] for 1 <= idx <= i. That is, if ans[curr_idx - 1][subset_sum - S[curr_idx]] is false, then this means we cannot achieve the remaining sum using the first curr_idx - 1 elements; hence, including S[curr_idx] will not help us achieve the target subset_sum. We would, again, need to skip this element and consider the next element, curr_idx = curr_idx + 1, so long as the condition curr_idx <= i is respected for the new curr_idx value.

- true: This isn't so hard in light of the considerations and analysis above. When curr_idx <= i and S[curr_idx] can be included to achieve subset_sum, then we set ans[i][subset_sum] to be true.

Essentially, we need to keep skipping items in S that satisfy the criteria for the false bullet points above until either we've skipped all the elements we can skip or we have found an element S[curr_idx] such that it's less than or equal to subset_sum, and the remaining sum, subset_sum - S[curr_idx], can be achieved with the first curr_idx - 1 elements:

SubsetSum(S[1...n], K)
    allocate Ans[0...n][0...K] = NIL everywhere
    Ans[0...n][0] = true everywhere
    Ans[0][1...K] = false everywhere
    for (i = 1; i <= n; i++)
        for (subset_sum = 1; subset_sum <= K; subset_sum++)
            curr_idx = 1
            while (curr_idx <= i and (S[curr_idx] > subset_sum or !Ans[curr_idx - 1][subset_sum - S[curr_idx]]))
                curr_idx++
            Ans[i][subset_sum] = (curr_idx <= i)    (true iff we stopped early due to seeing true value)
    return Ans[n][K]

Note that a cell is marked as true if any of the other cells it depends on is marked as true; that is, the cell ans[i][subset_sum] is set to true if any of the possible previous states lead to the sum subset_sum. Specifically, if there's any curr_idx (from 1 to i) such that ans[curr_idx - 1][subset_sum - S[curr_idx]] is true and S[curr_idx] can be included (i.e., S[curr_idx] <= subset_sum), then ans[i][subset_sum] is true. This represents the "logical OR" of all possible ways to achieve the sum subset_sum with the first i elements.

Before we try implementing the pseudocode above in Python, it's worth observing that we can make a small optimization to how our code is currently structured. As the previous illustrations showed while we progressed row by row, the higher-indexed rows have at least as many true locations as the rows above them. That is, as we consider more elements (i.e., higher i), we can form more combinations of sums, which increases the likelihood that a sum subset_sum can be achieved; thus, the number of true entries in the ans table usually increases in the lower rows (i.e., higher i-values). Consequently, if we check the higher-indexed rows first by starting with higher curr_idx values in the while loop (i.e., considering elements with higher indices first), then we are more likely to find a true condition earlier, allowing us to exit the loop sooner. This "true shortcut" is a minor optimization that lets us reduce unnecessary iterations. We can incorporate this simple change into our pseudocode as follows:

SubsetSum(S[1...n], K)

    allocate Ans[0...n][0...K] = NIL everywhere

    Ans[0...n][0] = true everywhere

    Ans[0][1...K] = false everywhere

    for (i = 1; i <= n; i++)

        for (subset_sum = 1; subset_sum <= K; subset_sum++)

            curr_idx = i

            while(curr_idx > 0 and (S[curr_idx] > subset_sum or !Ans[curr_idx - 1][subset_sum - S[curr_idx]]))

                curr_idx--

            Ans[i][subset_sum] = (curr_idx > 0) (true iff we stopped early due to seeing true value)

    return Ans[n][K]

We can implement the pseudocode above in Python in the following manner:
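A minimal sketch of that pseudocode, assuming 0-indexed Python lists (so the pseudocode's S[curr_idx] becomes S[curr_idx - 1]). The function name subset_sum_table is a hypothetical choice for illustration and is not taken from the original listing:

```python
def subset_sum_table(S, K):
    """Return True iff some subset of S sums to K (S holds positive integers)."""
    n = len(S)
    # ans[i][t] is True iff some subset of the first i items sums to t.
    # Row 0 starts out all False; column 0 is True (the empty subset sums to 0).
    ans = [[False] * (K + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        ans[i][0] = True

    for i in range(1, n + 1):
        for subset_sum in range(1, K + 1):
            # Scan candidate "highest index used" values from i down to 1.
            curr_idx = i
            # Short-circuiting `or` keeps the table index non-negative:
            # the lookup only runs when S[curr_idx - 1] <= subset_sum.
            while curr_idx > 0 and (
                S[curr_idx - 1] > subset_sum
                or not ans[curr_idx - 1][subset_sum - S[curr_idx - 1]]
            ):
                curr_idx -= 1
            # True iff the loop stopped early on a usable element.
            ans[i][subset_sum] = curr_idx > 0
    return ans[n][K]


print(subset_sum_table([17, 22, 6, 4, 2, 4], 45))  # True (17 + 22 + 6)
print(subset_sum_table([17, 22, 6, 4, 2, 4], 46))  # False
```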


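Once it's all said and done, note that our ans table uses $O(nK)$ space and that filling it takes $O(n^2 K)$ time in the worst case, since each of the $O(nK)$ cells may have to check up to $n$ earlier rows.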

Space optimization

At this point, if we return to our DP template, then we will see that our final considerations are only "garnish" steps: determine if space optimization is possible and then whether or not we need to store some extra information in order to possibly reconstruct an answer (the actual subset that sums to the given subset sum if one exists for this problem).

Is it possible for us to optimize for space in our iterative solution? Recall that, when filling the current cell in our table, we might have to look at one value from each row above the current cell:

So it doesn't seem like we can get rid of any of the rows. But maybe we don't need all of the columns. For example, if we're thinking about using the integer 6 from the set we've been discussing, $S = \{17, 22, 6, 4, 2, 4\}$, then we need to check 6 columns to the left, but we wouldn't need to keep anything to the left of that (i.e., for the row corresponding to integer 6, which is the third item in S):

In general, row i requires S[i] columns to the left of the current location. We could handle this on a row-by-row basis, treating each row separately and keeping S[i] columns for row i: $O\left(\sum_{i=1}^{n} S[i]\right)$ space. Or, if the maximum positive integer in S isn't too big, then we could simply keep $\max_i S[i]$ columns in every row for $O(n \cdot \max_i S[i])$ space. The decreased-space versions would be filled column by column.

Reconstructing the set?

How about any extra information we need to keep in order to reconstruct the subset? Even though the table goes up to 46 since 46 is the given subset sum, we can ask how other target sums got filled in, like 45, which we know is an achievable subset sum given the set S. But which subset(s) actually have values that sum to 45? Specifically, how did "row 6, column 45" get filled in as true? We can highlight some of the table cells this particular cell depends on (i.e., how we could have possibly reached ans[6][45] from previous cells):

The graphic above may be a bit difficult to understand at first. Essentially, we're trying to highlight all cells in the table that could have possibly been used to get to ans[6][45]. It becomes a bit clearer if we actually show the calculations and how this last cell depends on previous cells:

The boxes highlighted in red above indicate cells from which we could not possibly have gotten to row 6, column 45. What about the green boxes? Why is it possible for there to be more than one? We could get to row 6, column 45 from any of the three highlighted green squares because there's more than one way to get a subset sum of 45 from the elements in S. Maybe we can use all of this information to figure out what extra information is needed to reconstruct the appropriate subset.
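To see concretely which earlier cells can lead to ans[6][45], here is a small sketch that rebuilds the table and lists the candidate cells that are true. It assumes 0-indexed Python lists, and the helper names fill_table and true_predecessors are hypothetical, introduced only for this illustration:

```python
def fill_table(S, K):
    """ans[i][t] is True iff some subset of the first i items of S sums to t."""
    n = len(S)
    ans = [[t == 0 for t in range(K + 1)] for _ in range(n + 1)]
    for i in range(1, n + 1):
        for t in range(1, K + 1):
            # Logical OR over every choice x of "highest index used" (1-indexed).
            ans[i][t] = any(
                S[x - 1] <= t and ans[x - 1][t - S[x - 1]]
                for x in range(1, i + 1)
            )
    return ans


def true_predecessors(S, ans, row, target):
    """List the (row, column) cells that are true and can lead to ans[row][target]."""
    cells = []
    for x in range(row, 0, -1):
        remaining = target - S[x - 1]
        if remaining >= 0 and ans[x - 1][remaining]:
            cells.append((x - 1, remaining))
    return cells


S = [17, 22, 6, 4, 2, 4]
ans = fill_table(S, 46)
print(true_predecessors(S, ans, 6, 45))  # [(5, 41), (4, 43), (2, 39)]
```

Each listed cell corresponds to a different highest-indexed element in a subset summing to 45, which is why more than one cell can be true.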

Let's think carefully about what the cell entry of ✓ at ans[6][45] actually means for a moment: using some of the first 6 integers in the set S, we can get a subset sum of 45. If we let T[x, y] denote the true/false value for the cell ans[x][y], then we can capture the essence of the previous photo with the following determination for T[6, 45] (the following is the "logical OR" of all possible ways to achieve the sum 45 with the first 6 elements, as remarked on previously):

T[6, 45] = T[5, 45 - S[6]] or T[4, 45 - S[5]] or T[3, 45 - S[4]] or

T[2, 45 - S[3]] or T[1, 45 - S[2]] or T[0, 45 - S[1]]

Each T[x, y] above is a square we need to check, but even without checking all of those squares, we kind of know T[6, 45] must be true if we look at the box right above it, T[5, 45]. We observed this kind of pattern previously when developing the iterative version and closely observing how the memoization table was being filled, row by row:

Table start state

Specifically, if a subset of the first 5 integers sums to 45, then of course some subset of the first 6 integers does too — if we go down any column from the top, once it turns true (i.e., has a cell entry of ✓), then it has to stay true because adding extra potential integers to use doesn't force us to use them (this is illustrated with the columns highlighted red in the images above).

What previous states/cells does the state/cell of T[5, 45] depend on? It looks almost the same as that for T[6, 45]:

T[5, 45] = T[4, 45 - S[5]] or T[3, 45 - S[4]] or

T[2, 45 - S[3]] or T[1, 45 - S[2]] or T[0, 45 - S[1]]

The only difference is that now the state/cell/box T[5, 45 - S[6]] = T[5, 41] has been removed from consideration. Let's compare the determinations for T[6, 45] and T[5, 45], one above the other:

T[6, 45] = T[5, 45 - S[6]] or T[4, 45 - S[5]] or T[3, 45 - S[4]] or

T[2, 45 - S[3]] or T[1, 45 - S[2]] or T[0, 45 - S[1]]

T[5, 45] = T[4, 45 - S[5]] or T[3, 45 - S[4]] or

T[2, 45 - S[3]] or T[1, 45 - S[2]] or T[0, 45 - S[1]]

The situation above shows that, by substitution, we have T[6, 45] = T[5, 45 - S[6]] or T[5, 45]. This naturally suggests a major optimization: when looking at T[6, 45], instead of checking the five other locations

T[4, 45 - S[5]], T[3, 45 - S[4]], T[2, 45 - S[3]], T[1, 45 - S[2]], T[0, 45 - S[1]]

we should just check one:

T[5, 45]

Hence, when looking at T[6, 45], we should really just check two locations in total:

T[6, 45] = T[5, 45 - S[6]] or T[5, 45]

Our illustration can be updated to reflect this improvement:

More importantly, however, the pattern noticed above doesn't just apply to our specific example but to all sorts of assessments we could hope to make from our memoization table:

T[i, j] = T[i - 1, j - S[i]] or T[i - 1, j]

That is:
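As a sanity check on the recurrence, the sketch below fills the table twice: once with the logical OR over all earlier rows and once with only the two-term recurrence. It assumes 0-indexed Python lists and treats an out-of-range column (j - S[i] < 0) as false; the function names are hypothetical:

```python
def fill_all_rows(S, K):
    """T[i][j] via the OR over every possible highest index x <= i."""
    n = len(S)
    T = [[j == 0 for j in range(K + 1)] for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, K + 1):
            T[i][j] = any(
                S[x - 1] <= j and T[x - 1][j - S[x - 1]]
                for x in range(1, i + 1)
            )
    return T


def fill_two_terms(S, K):
    """T[i][j] = T[i - 1][j - S[i]] or T[i - 1][j], checking only two cells."""
    n = len(S)
    T = [[j == 0 for j in range(K + 1)] for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, K + 1):
            # Using item i is only possible when it does not exceed j.
            use_item = S[i - 1] <= j and T[i - 1][j - S[i - 1]]
            T[i][j] = use_item or T[i - 1][j]
    return T


S = [17, 22, 6, 4, 2, 4]
print(fill_all_rows(S, 46) == fill_two_terms(S, 46))  # True
```

The two-term version does constant work per cell instead of up to i checks, which is where the speedup comes from.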

It's really easy to miss the observation above in terms of speeding up the algorithm, especially if we start down the path we did for this second recursive approach (i.e., instead of what will be our third and final approach). When we couldn't find a DP algorithm for our first recursive approach (because the number of distinct subsets we were considering would be exponential, and DP algorithms need a reasonably small set of distinct calls to make), it forced us to change directions. We were stuck and there wasn't much choice.

This is much harder here. The recursive and DP programs we came up with work fine. But by fully exploring the problem, there's a subtle clue towards a better algorithm (i.e., the clue we discovered above). In this case, since we're so far into the effort of solving this problem, we could try to incorporate the observation above directly into what we've done so far to get a final answer quickly. But it's worth noticing that a different recursive idea could have sent us to what will be our final answer more directly.

Recursive idea 3

Let's recall the problem at hand for a fresh start:

- Given: A set $S$ of positive integers and a positive integer $K$.

- Answer: True if and only if there exists a subset of $S$ that sums to $K$.

We've seen the following two recursive ideas for trying to work out how to solve this problem effectively:

- If true, what if we know one value in the subset?

  - If $S[i]$ is in the subset, then solve $(S \setminus \{S[i]\},\ K - S[i])$.

  - Try all possible $i$.

- What if we knew the maximum index from $S$ used in the subset for the subset sum?

  - If $i$ is the highest index used, then solve $(S[1 \ldots i - 1],\ K - S[i])$.

  - Try all possible $i$.

As shown above, the second approach was based on the following question: What is the highest indexed item that we used in our subset to achieve the subset sum? We tried all possible values, and we worked out a recursive solution to each. But our clue led us to what is a simpler question: Do we need the last integer in S? That is, do we actually use the last integer in S as part of our subset solution? We have two choices: we don't use it or we do. If we don't use it, then we should solve S[1, ... , n - 1], K; if we do use it, then we should solve S[1, ... , n - 1], K - S[n]. Either way, we're left with a recursive problem with one fewer element in our set (i.e., 1 ... n - 1 instead of 1 ... n) and one of two different possible target summation values (i.e., K or K - S[n]). This clue that we noted at the end of the previous section gives us a simpler recursive algorithm than before:

SubsetSum(S[1...n], K)

    return SubsetSumRecursive(S, n, K)

SubsetSumRecursive(S[1...n], i, k)

    if (k == 0)

        return true

    if (k < 0 or i == 0)

        return false

    return SubsetSumRecursive(S, i - 1, k) or SubsetSumRecursive(S, i - 1, k - S[i])

Of course, we could make the recursive calls above in either order (just as we did when calculating the Fibonacci numbers).
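A minimal Python sketch of this recursion, assuming 0-indexed lists (so the pseudocode's S[i] becomes S[i - 1]); the snake_case function names are hypothetical:

```python
def subset_sum_recursive(S, i, k):
    """True iff some subset of the first i items of S sums to k."""
    if k == 0:
        return True  # the empty subset reaches a remaining target of 0
    if k < 0 or i == 0:
        return False  # overshot the target, or no items left to use
    # Either skip the last item, or use it and shrink the target.
    return (subset_sum_recursive(S, i - 1, k)
            or subset_sum_recursive(S, i - 1, k - S[i - 1]))


def subset_sum(S, K):
    return subset_sum_recursive(S, len(S), K)


print(subset_sum([17, 22, 6, 4, 2, 4], 45))  # True
print(subset_sum([17, 22, 6, 4, 2, 4], 46))  # False
```

Because `or` short-circuits, the second call is skipped whenever the first one already returns true.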

For the true instance where S = {17, 22, 6, 4, 2, 4}, K = 45, if we recursively first try throwing the last item away and creating the target sum with whatever's left for our subset (i.e., if we call SubsetSumRecursive(S, i - 1, k) first and SubsetSumRecursive(S, i - 1, k - S[i]) second), then we only see a little bit of how big the recursion tree can be:

This is because once any recursive call hits a true base case, then the recursion unwinds to return true to the root of the tree. But let's consider what happens if we flip the order of the recursive calls and call SubsetSumRecursive(S, i - 1, k - S[i]) first instead (i.e., we recursively first try to add the last integer to be part of the subset):

The recursion tree above shows we also find an answer quickly, but what we end up with is a different subset because there's more than one subset with that sum.
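The effect of call order on which subset is found can be sketched as follows. This variant returns the chosen values instead of a boolean; the function find_subset and its include_first flag are hypothetical additions for illustration, again assuming 0-indexed lists:

```python
def find_subset(S, i, k, include_first):
    """Return values from the first i items of S that sum to k, or None."""
    if k == 0:
        return []
    if k < 0 or i == 0:
        return None
    last = S[i - 1]

    def skip():
        # Throw the last item away and keep the same target.
        return find_subset(S, i - 1, k, include_first)

    def take():
        # Use the last item and reduce the target accordingly.
        rest = find_subset(S, i - 1, k - last, include_first)
        return None if rest is None else rest + [last]

    # Try the two branches in the requested order; stop at the first success.
    for branch in ((take, skip) if include_first else (skip, take)):
        found = branch()
        if found is not None:
            return found
    return None


S = [17, 22, 6, 4, 2, 4]
print(find_subset(S, len(S), 45, include_first=False))  # [17, 22, 6]
print(find_subset(S, len(S), 45, include_first=True))   # [17, 22, 2, 4]
```

Both results sum to 45; only the order of the two recursive calls differs.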

We can look at the check marks in the recursion trees above to see which recursive call order results in which subset (start from the base case leaf nodes and work up the tree to the root, following the check marks along the way):

0,0

1,17 # include 1 (17)

2,39 # include 2 (22)

3,45 # include 3 (6)

4,45 # don't include 4

5,45 # don't include 5

6,45 # don't include 6

17 + 22 + 6 = 45

0,0

1,17 # include 1 (17)

2,39 # include 2 (22)

3,39 # don't include 3

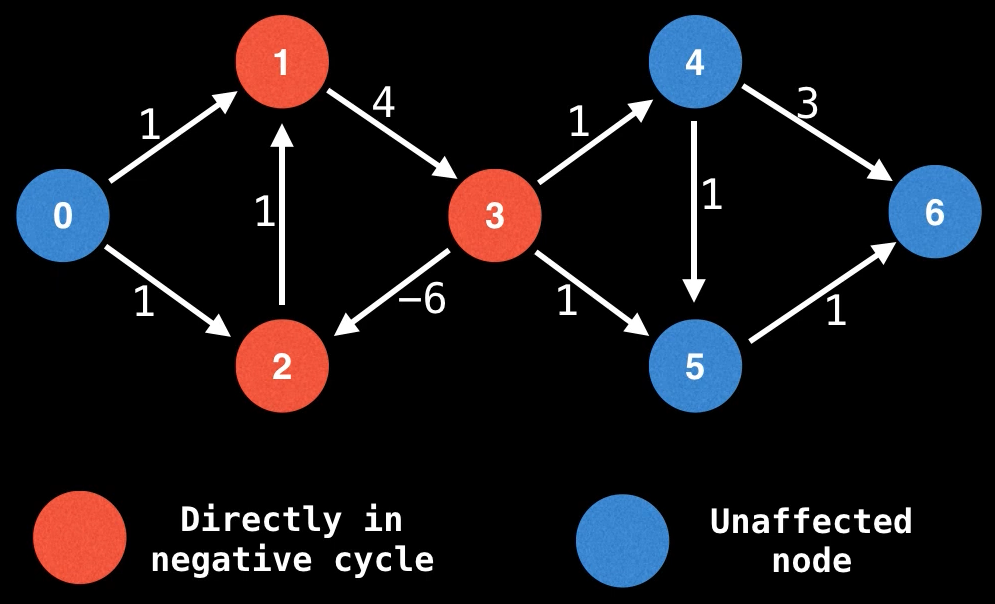

4,39 # don't include 4